An employee of xAI inadvertently published a private API key on GitHub, which, for two months, granted access to internal language models trained on data sourced from SpaceX, Tesla, and X (formerly Twitter). The breach was first discovered and disclosed by a Seralys employee, who shared the details on LinkedIn.

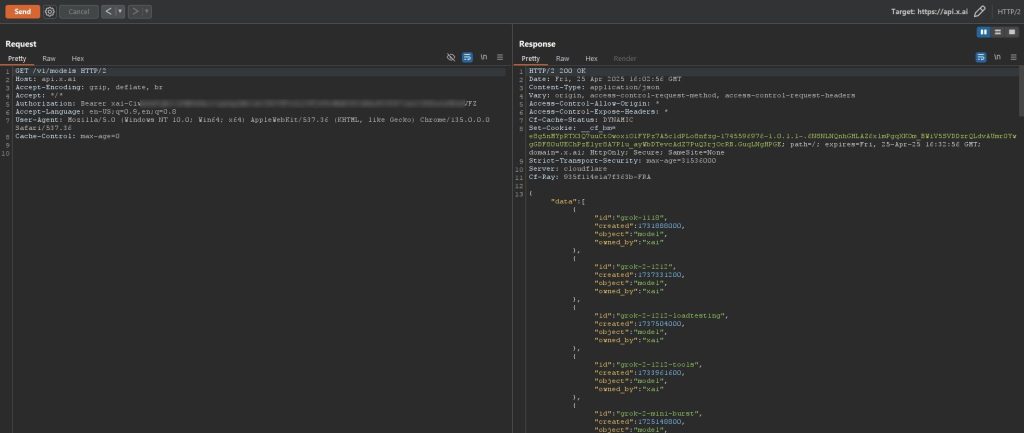

The incident swiftly drew the attention of GitGuardian, a company specializing in the detection of exposed keys and secrets within code repositories. Their analysis revealed that the leaked key provided access to at least 60 proprietary and customized language models, including unreleased versions of Grok, xAI’s own chatbot. Some of the exposed models bore explicit references to SpaceX and Tesla in their names; the models listed included “grok-spacex-2024-11-04” and “tweet-rejector.”
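To illustrate the kind of detection described above, here is a minimal sketch of a secret scanner in Python. It is not GitGuardian's method: the regular expression, the entropy threshold, and the function names `shannon_entropy` and `find_candidate_secrets` are all assumptions chosen for illustration, and production scanners rely on many provider-specific detectors and validation steps.

```python
import math
import re

# Hypothetical detector: an assignment to a name containing "api_key",
# "secret" or "token", followed by a quoted value of 20+ key-like characters.
ASSIGNMENT = re.compile(
    r"(?i)(api[_-]?key|secret|token)\s*[:=]\s*['\"]([A-Za-z0-9_\-]{20,})['\"]"
)


def shannon_entropy(value: str) -> float:
    """Return the Shannon entropy of the string, in bits per character."""
    counts = {ch: value.count(ch) for ch in set(value)}
    total = len(value)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def find_candidate_secrets(text: str, min_entropy: float = 3.5):
    """Return (line number, matched name, redacted value) for likely secrets.

    The entropy threshold is an assumed heuristic that filters out
    placeholders such as "aaaaaaaa..." while keeping random-looking values.
    """
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in ASSIGNMENT.finditer(line):
            value = match.group(2)
            if shannon_entropy(value) >= min_entropy:
                # Report only a short prefix so the scanner does not re-leak the key.
                findings.append((line_no, match.group(1), value[:4] + "..."))
    return findings
```

Run against the contents of a committed file, `find_candidate_secrets` flags random-looking credentials assigned to key-like names and skips obvious placeholders. A scanner of this kind only detects the exposure; as the timeline in this incident shows, the key still has to be revoked by its owner.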

According to GitGuardian, the owner of the key was first notified on March 2, yet the credential remained active until the end of April. Only after a direct appeal to xAI’s security team on April 30 was the key finally revoked and the corresponding GitHub repository taken down. Until then, anyone in possession of the key could access xAI’s private models and API with the same privileges as the employee to whom it was issued.

GitGuardian emphasized the severe risks posed by this leak. With backend access to Grok, malicious actors could have executed prompt injection attacks, manipulated model behavior for nefarious purposes, or embedded malicious code into the supply chain.

Amid the fallout, additional concerning revelations have emerged. The Department of Government Efficiency (DOGE) has reportedly begun integrating AI systems into various U.S. government frameworks. Investigations suggest the DOGE team had been feeding budgetary data from the U.S. Department of Education into an AI system for analysis, with intentions to expand this methodology across other agencies. Moreover, over 1,500 federal employees have been granted access to GSAi, an internal chatbot developed by DOGE.

While xAI insists that the leaked key did not expose user data or sensitive government information, experts remain skeptical. Because the models were trained on proprietary corporate datasets, fragments of confidential material related to internal projects at xAI, X (formerly Twitter), or SpaceX may have been inadvertently embedded within them.