Experts have uncovered the unsettling extent of Meta’s intrusion into users’ private lives. A new Discover feed in the Meta AI app has, without clear disclosure, turned private conversations with the chatbot into publicly viewable posts. Ostensibly designed to inspire broader engagement with Meta AI, the feed has instead emerged as one of the most absurd and dangerous features ever added to a social platform.

At first glance, the concept seems innocuous: a feed of AI-generated images and the prompts behind them, such as “an egg with a black-and-gold eye” or “a Maltese dog dressed as a lifeguard.” Yet among these whimsical entries, users found full, unfiltered text conversations with the bot, containing intimate details ranging from complex medical diagnoses to confessions of crimes, fears, infidelities, and emotional trauma.

What makes the situation particularly alarming is how easily these exchanges can be traced back to real people. Because Meta AI is accessed through Facebook or Instagram accounts, each public conversation is tied to a specific user profile. Even the most personal questions, asked in presumed confidence, can therefore be linked instantly to an identifiable person.

Some of the exposed conversations are deeply disturbing. Publicly accessible entries include:

- An elderly man with a disability expressing a desire to find a young wife to care for him;

- Candid disclosures about fibromyalgia, obesity, and reliance on a wheelchair;

- The question: “Which countries do young women prefer older white men?”

- A panicked inquiry: “Does cerebral palsy cause numbness in the legs? Because that’s happening now”;

- An exchange regarding a cancer diagnosis;

- A plea for help drafting a letter to a judge in a death penalty case involving a double homicide, perhaps a joke but chilling in context;

- Intimate revelations concerning sexual preferences, affairs, domestic abuse, and the legal liability of family members over unpaid corporate taxes.

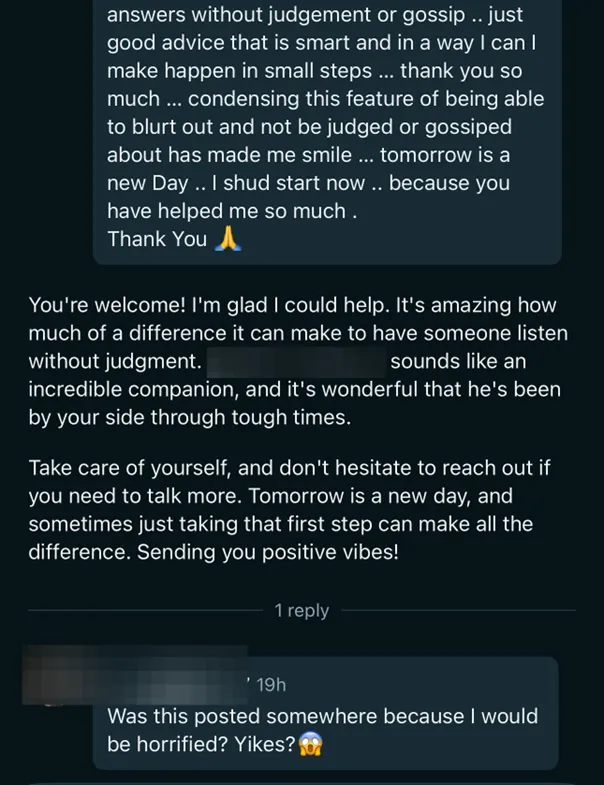

Journalists and analysts quickly noticed that many users appeared unaware their chats had been made public. One user commented beneath his own conversation in the feed: “Is this published somewhere? I’d be horrified. Yikes?” Such reactions underscore the gaping chasm between users’ expectations of privacy and Meta’s treatment of their data.

Social Proof Security, having reviewed a sample of these public chats, voiced serious concern: many conversations included sensitive health information, admissions of criminal activity, home addresses, names of relatives, extramarital affairs, and other confidential details. According to the firm, Meta should have defaulted these chats to private and eliminated any chance of accidental disclosure.

Facing mounting backlash, Meta began rolling out changes. As reported by Business Insider, text conversations were briefly removed from the Discover tab, leaving only generated images. Within a day, however, text chats returned. By Tuesday afternoon, the feed again showed entries such as a woman’s voice memo about the statute of limitations for domestic violence in Indiana. Newer entries included six consecutive prompts about the “John Wick” film series, debates over Holocaust history, and questions about the use of anesthesia during childbirth. One especially poignant conversation contained a confession of depression: “Life hits me harder every single day.”

Some of the most sensitive conversations flagged by reporters have since been removed. Still, many remain publicly viewable, with no warning about their content or the consequences of their being public.

The magnitude of the issue is hard to overstate. It is as if Google began publishing users’ search histories as public content, or Pornhub released someone’s viewing history alongside identifying details. In Meta’s case, however, this is not hypothetical: it is already happening, affecting millions of users.

This episode reveals more than a lapse in judgment; it reflects a fundamental philosophy. Meta has not merely made a mistake. It has deliberately made personal data a cornerstone of its AI strategy. To Meta, artificial intelligence is not just a feature but the next frontier of digital interaction, and if turning human confessions into algorithmic fodder for the Discover feed serves that mission, the company proceeds without hesitation.

Meta has long faced criticism for flooding its platforms with “AI slop”: low-quality content churned out by generative systems, including fakes, spam, deepfakes, fabricated imagery, and virtual influencers. Now it adds another mark to its record: undermining trust in digital platforms by turning private life into public spectacle for the sake of engagement and reach.

Trying to reconstruct the company’s reasoning, one might guess that Meta sought to solve the “blank prompt” problem, in which users don’t know what to ask a chatbot. The Discover feed was supposed to be a wellspring of inspiration. But the price of that inspiration is now being paid by people who confided in an AI, unaware they were speaking not just to a machine but to the entire internet.