Announced at Samsung’s launch event in January, Gemini’s new visual features, which let users show the AI what they are looking at and receive contextual responses, are now gradually rolling out to users.

These features stem from Project Astra, the universal AI assistant technology unveiled at Google I/O 2024. The first lets Gemini “see” what is displayed on the phone’s screen and respond intelligently to questions about that content.

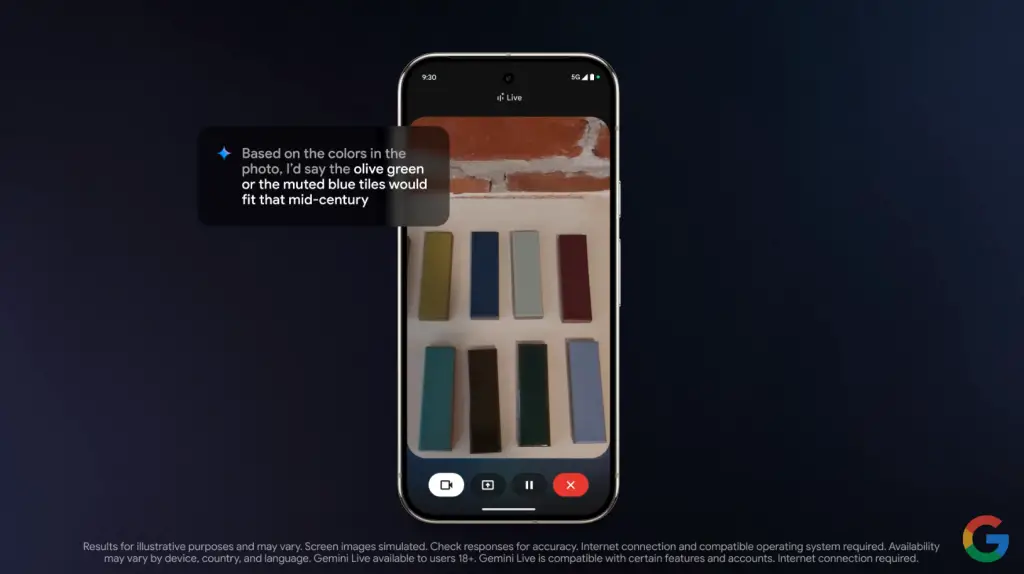

A complementary feature lets users point their phone’s camera at the world around them, with Gemini answering questions in real time based on what it sees.

Initially, access is limited to Gemini Advanced subscribers, a tier offered through the Google One AI Premium plan.

Google is not alone: Amazon has introduced similar functionality in its AI-enhanced Alexa+ platform, and Apple showcased an upcoming, AI-powered Siri with comparable abilities at WWDC 2024. However, Google has taken the lead by bringing these capabilities to market first, and it is expected to expand its user base further through its own Pixel smartphone lineup and Samsung’s widely adopted Galaxy devices in markets worldwide.