Online fraud is entering a new and more insidious era. Scammers now use deepfake technology not only in pre-recorded videos but also in live video calls. With a laptop, a webcam, and specialized software, they can change their appearance completely in real time, deceiving victims with unprecedented ease and realism.

In one documented case, what appears to be an elderly white man with a silver beard chats warmly over Skype with an older woman. He compliments her and jokes about sending a security guard to protect her from admirers. She smiles, unaware that the person on the other end is actually a young Black man using real-time face-masking software. The interaction looks so authentic that even fine details such as blinking, lip movement, and facial expressions fail to betray the illusion.

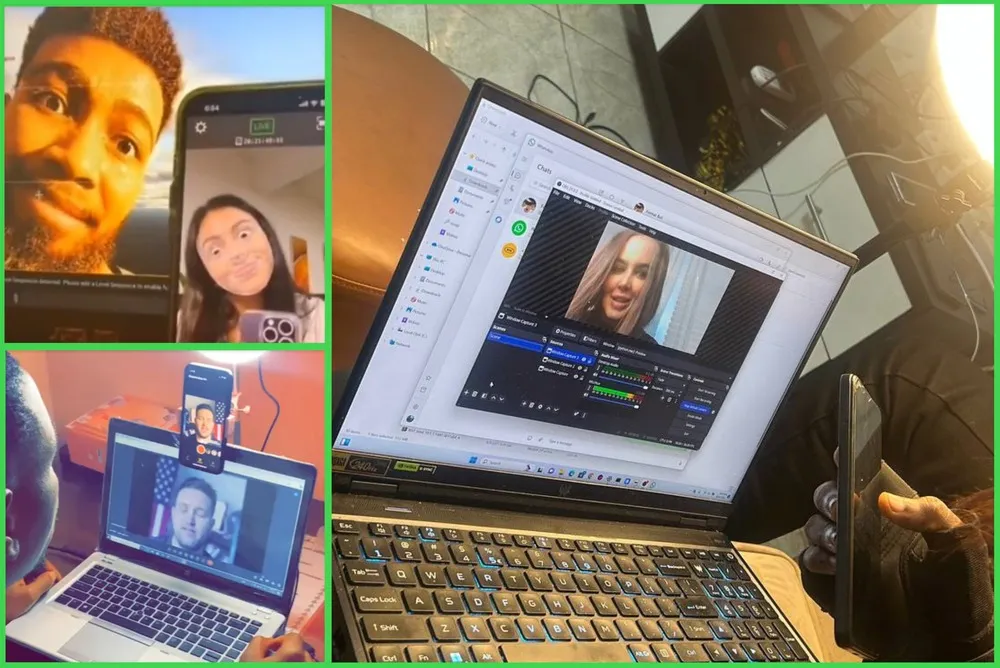

These deepfake disguises work by overlaying a pre-built face onto a live video stream. Current tools let scammers instantly appear as elderly people, women, or men of any race and age. Using photos taken from IDs, dating profiles, or social media accounts, they create strikingly realistic personas for online interaction.

The technology poses a particular threat in romance scams. Fraudsters build relationships with victims to cultivate emotional dependence, then start asking for money under various pretexts, such as travel costs, medical emergencies, or other supposedly urgent needs. With deepfakes, even a video call no longer guarantees that the deception will be caught early.

Scammers rely on readily available tools. Apps such as Amigo AI and Magicam perform real-time face replacement based on uploaded photos. To improve the result, scammers add screen mirroring, use OBS Studio to create a virtual webcam, and rely on plugins such as NDI Tools. A ring light dramatically improves the lighting, making the deepfake even more convincing.

Until recently, real-time deepfakes looked crude, with jerky movements and primitive facial expressions. In 2024 and 2025, however, their quality improved sharply. Fraud-focused Telegram channels now circulate videos of live conversations in which scammers not only smile and blink but also hold objects naturally, wave at the camera, and maintain the illusion with unsettling precision.

Beyond romance scams, deepfakes are increasingly used to bypass identity verification at banks and cryptocurrency exchanges. Some fraudsters forge documents, generate faces with AI systems such as ChatGPT, and pass “liveness” checks to open accounts or claim fraudulent tax refunds.

According to the anti-fraud firm SentiLink, the number of such incidents has risen markedly over the past year. In one example, a deepfaked face successfully passed verification on Cash App, a popular U.S. payment platform. Despite anti-spoofing algorithms, current systems remain poorly equipped to counter this kind of sophisticated deception.

Fake identities are not limited to financial fraud. Some criminal groups use deepfakes for political manipulation, to spread disinformation, or to disrupt corporate operations.

Companies that develop deepfake tools are beginning to acknowledge the problem. Representatives of Magicam told reporters they had not anticipated fraudulent uses of their software and are now exploring ways to curb abuse. However, many tools still lack built-in safeguards or mechanisms for detecting suspicious behavior.

Meanwhile, a prominent figure in the fraud community who goes by Format Boy is actively teaching others how to create deepfakes. He runs Telegram channels, posts instructional videos on YouTube, and openly promises to turn his followers into “millionaires” with step-by-step guides to hardware and software setup.

Experts note that real-time deepfakes are currently most often deployed in romance scams or for illicit tax refunds. Yet as the technology evolves and becomes more accessible, a rise in attacks targeting financial institutions, government services, and corporate platforms appears inevitable.

Countering these threats will require better real-time deepfake detection, stronger identity verification procedures, and regulators who adapt to this new reality. Until then, public awareness, vigilance during video calls, and a healthy dose of skepticism, even toward the most charming digital interlocutors, remain our best defense.