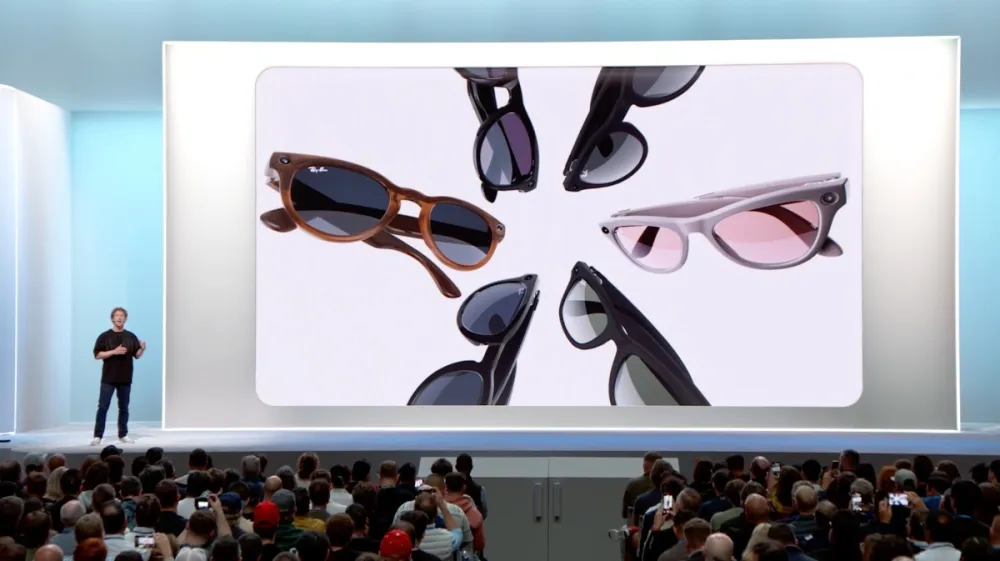

According to a report by The Information, Meta originally planned to equip its smart glasses with facial recognition, but later scrapped the feature. The company is now revisiting the idea and is considering building it into its next-generation smart glasses as part of an expanded environmental awareness system.

The feature was originally abandoned because of battery life: with facial recognition running, the glasses lasted only about thirty minutes. Sources say Meta has since made improvements and aims to extend usage time to several hours in the model slated for release in 2026.

Beyond adding facial recognition to smart glasses to enable real-time AI applications, Meta is reportedly exploring similar technology for camera-equipped smart headphones. These devices could give wearers environmental cues, such as warnings about obstacles in their path or information about nearby landmarks.

However, rolling out such features could reignite privacy and data protection concerns. Internally, Meta is reportedly debating whether to add an indicator light to the glasses to signal when environmental awareness is active, though such a measure may not be enough to ease growing public unease about surveillance and consent.

In a recent software update for its Ray-Ban smart glasses, Meta turned on the Meta AI assistant by default. Users who want to deactivate it must disable the “Hey, Meta” wake word. Meta has also clarified that voice interactions may be used to train its AI models, a decision likely to draw further scrutiny over data use and user privacy.