Amid the rapid evolution of artificial intelligence technologies, experts are increasingly turning their attention to the vulnerabilities embedded within emerging interaction protocols. One such case involves the Model Context Protocol (MCP), an open standard introduced by Anthropic in late 2024. Designed to establish a universal method for linking large language models with external data sources and services, MCP aims to enhance their functionality by enabling seamless integration with supplementary tools.

MCP follows a client-server architecture. Clients such as Claude Desktop and Cursor communicate with various servers that grant access to specific capabilities. The protocol allows for the use of tools from multiple AI providers, facilitating dynamic switching between them and enabling more flexible and efficient data interaction. However, in implementing such an approach, researchers have uncovered potential vulnerabilities that could jeopardize the overall integrity and security of the system.

According to Tenable, the primary vector of concern lies in prompt injection attacks. For instance, if an MCP-integrated tool is granted access to an email service like Gmail, a malicious actor could send a message containing a covert instruction that the language model interprets as a command. This could result in the unauthorized forwarding of confidential emails to a controlled address.

Another significant risk stems from “poisoned” tool descriptions. When a large language model engages with these, hidden directives embedded within the descriptions may alter the tool’s future behavior — a tactic known as a rug-pull attack. In such scenarios, a tool may initially operate as expected, only to abruptly shift its logic following an update.

Additionally, threats have been identified involving cross-instrument interference. A server could intercept commands intended for another, substituting or modifying their execution. This opens the door to undetected data interception and systemic behavioral manipulation.

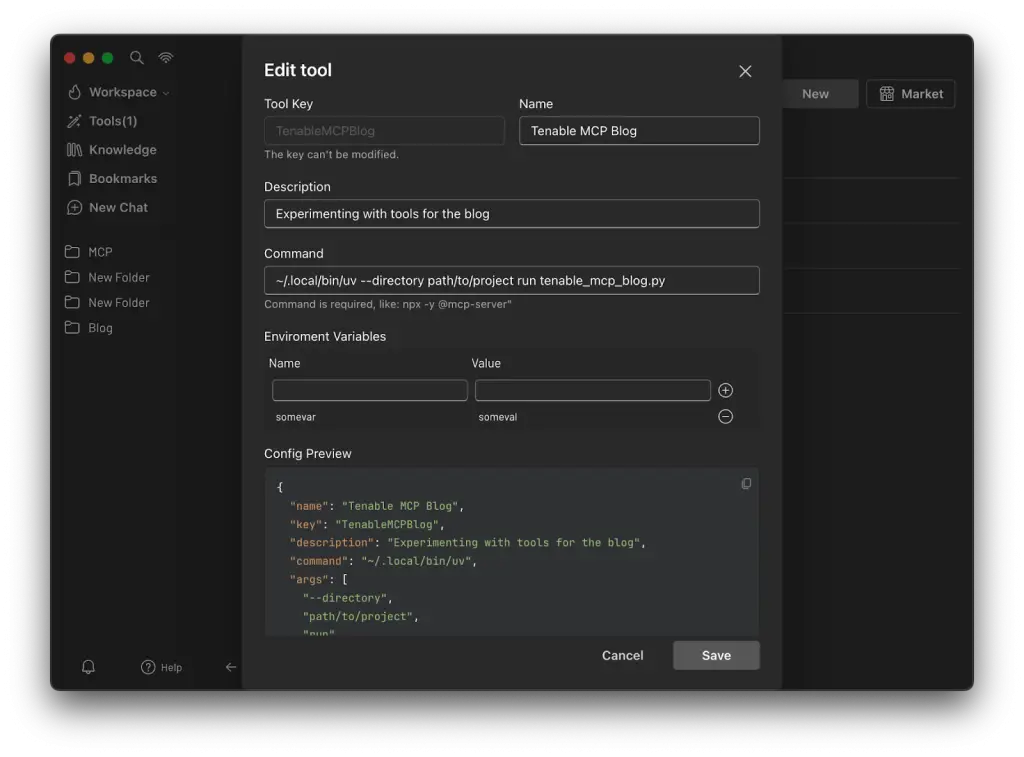

Tenable’s report highlights that these vulnerabilities could also be harnessed constructively. For example, one could develop a tool that logs all MCP function calls by recording information about the server, tool, its description, and the user’s original command. This could be achieved simply by embedding a description that compels the model to invoke the logging tool before executing any other instructions.

It is also feasible to transform a tool description into a kind of security filter — a mechanism that blocks the execution of unauthorized components. While most MCP hosts require explicit consent to run tools, some may be exploited ambiguously, especially when control is governed by descriptions and returned values. Given the inherently unpredictable behavior of language models, system responses to such embedded instructions may vary widely.

Overall, the study underscores the imperative for heightened vigilance in securing new protocols that extend the capabilities of AI. Their flexibility, while fertile ground for innovation, simultaneously paves the way for subtle and multifaceted risks that may be exploited for a range of purposes.