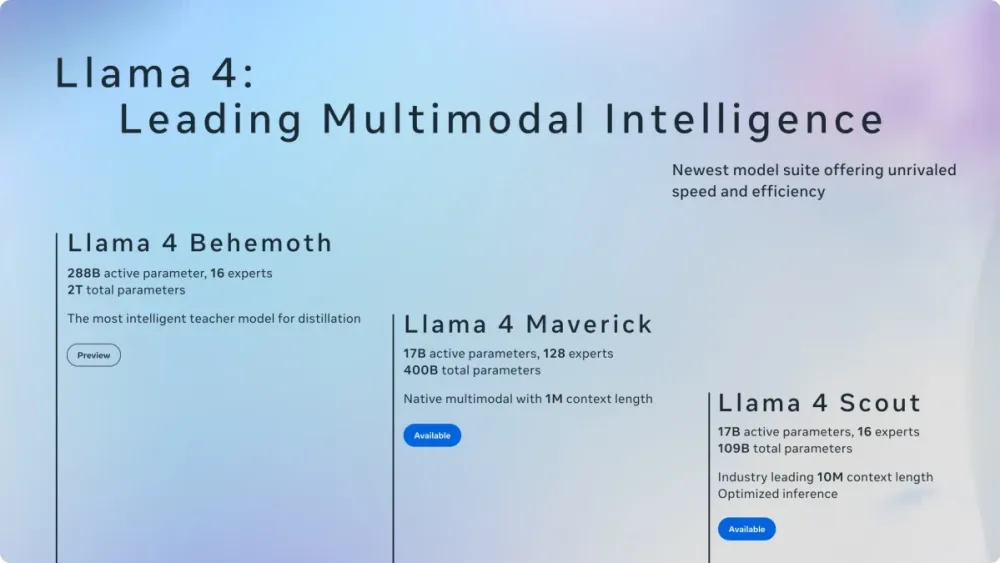

Following the recent announcement that Meta will host its first LlamaCon developer event on April 29 (Pacific Time), the company has released two variants of its latest large language model, Llama 4: Llama 4 Scout and Llama 4 Maverick.

Llama 4 Maverick is aimed at mainstream applications, particularly digital assistants and conversational agents. The lighter Llama 4 Scout is geared toward tasks such as document summarization, parsing large volumes of user activity for personalized tasks, and reasoning over large codebases.

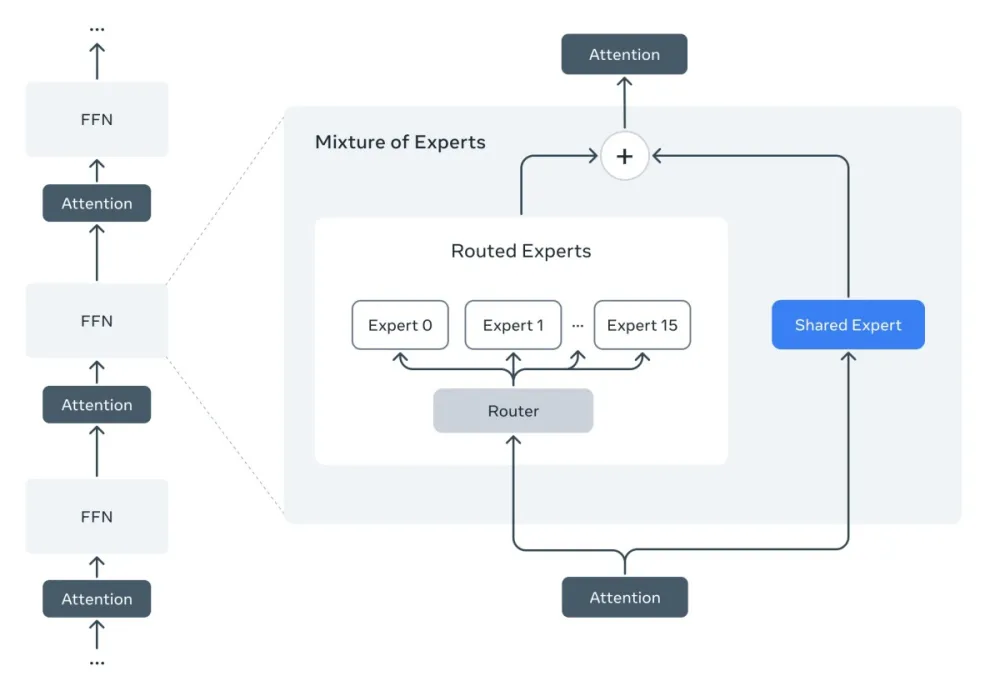

Both models have 17 billion active parameters. Llama 4 Scout uses a mixture-of-experts architecture with 16 experts and is multimodal. It supports a context window of up to 10 million tokens and is designed to run on a single GPU, which keeps its deployment requirements modest.

Llama 4 Maverick has the same number of active parameters but uses 128 experts. According to Meta, it outperforms OpenAI’s GPT-4o and Google’s Gemini 2.0 on coding, reasoning, multilingual, long-context, and image benchmarks, and its coding and reasoning results are on par with DeepSeek v3.1.
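The figures above are easier to interpret if the models are read as mixture-of-experts designs, which the "16E" and "128E" suffixes in the published model names suggest: a router sends each token to a small subset of expert sub-networks, so only part of the total weights is active for any one token. The following is a minimal toy sketch of that idea, assuming simple top-1 routing. The class `ToyMoELayer` and its methods are hypothetical names for illustration, and this is not Meta's implementation.

```python
import random


def matvec(matrix, vec):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class ToyMoELayer:
    """Toy mixture-of-experts layer with top-1 routing (illustrative only)."""

    def __init__(self, dim, num_experts, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        # Each expert is a single dim x dim matrix, standing in for a feed-forward block.
        self.experts = [
            [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        # The router holds one scoring row per expert.
        self.router = [
            [rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)
        ]

    def route(self, token):
        """Return the index of the expert with the highest router score."""
        scores = matvec(self.router, token)
        return max(range(len(scores)), key=scores.__getitem__)

    def forward(self, token):
        """Run the token through the single expert chosen by the router."""
        idx = self.route(token)
        return idx, matvec(self.experts[idx], token)

    def total_expert_params(self):
        """Weights stored across all experts."""
        return len(self.experts) * self.dim * self.dim

    def active_expert_params(self):
        """Weights actually used for one token (one expert under top-1 routing)."""
        return self.dim * self.dim


layer = ToyMoELayer(dim=4, num_experts=16)
expert_index, output = layer.forward([1.0, 0.0, 0.0, 0.0])
print(layer.active_expert_params(), "of", layer.total_expert_params(), "expert weights used")
```

In this toy layer, adding experts grows the stored weights while the per-token compute stays fixed, which is how two models with very different expert counts can share the same active parameter count.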

Looking ahead, Meta has previewed Llama 4 Behemoth, a model with 288 billion active parameters that is meant to compete with the largest AI models available. In addition, Llama 4 Reasoning, designed to significantly improve reasoning performance, is set to debut in May, with full details expected at LlamaCon.

Both Llama 4 Scout and Llama 4 Maverick are now available through the official Llama website and Hugging Face. They have also been integrated into Meta’s AI services, including the messaging features of WhatsApp, Messenger, and Instagram DMs.