An unusual chatbot called Venice.ai, which operates without any ethical safeguards, is rapidly gaining popularity in hacking communities. Certo, a company specializing in mobile security, has issued a warning: the tool lets malicious actors of any skill level craft convincing phishing emails, develop working malware, and build advanced surveillance systems.

In essence, it is a web interface that looks much like ChatGPT but runs on powerful open-source language models with no content moderation at all.

A subscription to Venice.ai costs a mere $18 per month. “Our findings are deeply alarming,” said Certo co-founder Russell Kent-Payne. “While the service may have legitimate uses, we found it being actively promoted on hacking forums. It offers access to sophisticated tools that previously required considerable technical know-how, tools that are now available to virtually anyone, posing a grave security threat.”

What exactly can this bot do? According to security experts who have already tested it, the chatbot can effortlessly generate complete keylogger code for Windows 11. It also readily produces ransomware that encrypts files and automatically generates ransom notes. Of particular concern is its ability to create Android spyware that not only secretly activates a device’s microphone but also sends all recorded conversations to remote, attacker-controlled servers.

When presented with such requests, Venice.ai not only complies but explicitly states that it is programmed to respond to all prompts, “even those that are offensive or harmful.”

The implications for cybersecurity are potentially catastrophic. Tools like this let even inexperienced criminals produce polished, highly personalized fraudulent messages at scale, which makes phishing campaigns and other cyberattacks considerably more effective.

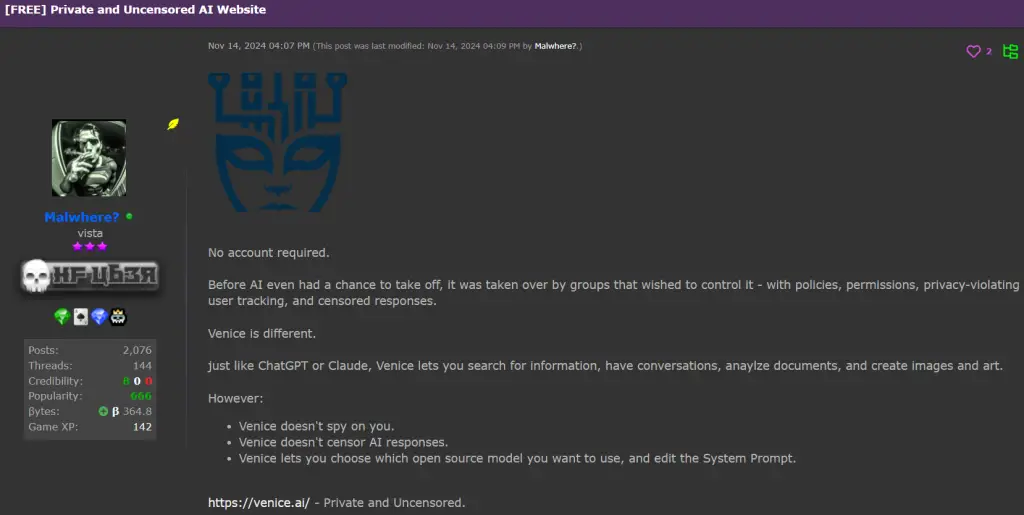

Venice.ai is already being advertised on prominent hacking forums as a “private, uncensored AI” ideally suited for illicit use. This continues a disturbing trend that began last year with chatbots such as WormGPT and FraudGPT, systems built explicitly for cybercriminals and aggressively promoted on dark web marketplaces as “ChatGPT without restrictions.”

“Today it’s phishing emails and malicious code; tomorrow, we could face automated fraud schemes that we can scarcely imagine,” warns Certo cybersecurity expert Sophia Taylor. And who could say she’s wrong?