As Moore’s Law slows and data-center energy demand surges, AMD has set an ambitious goal: to increase the energy efficiency of its AI systems twentyfold by 2030. Central to this vision is the transition to rack-scale architecture, an approach that treats the entire server rack, rather than the individual chip, as the unit of design.

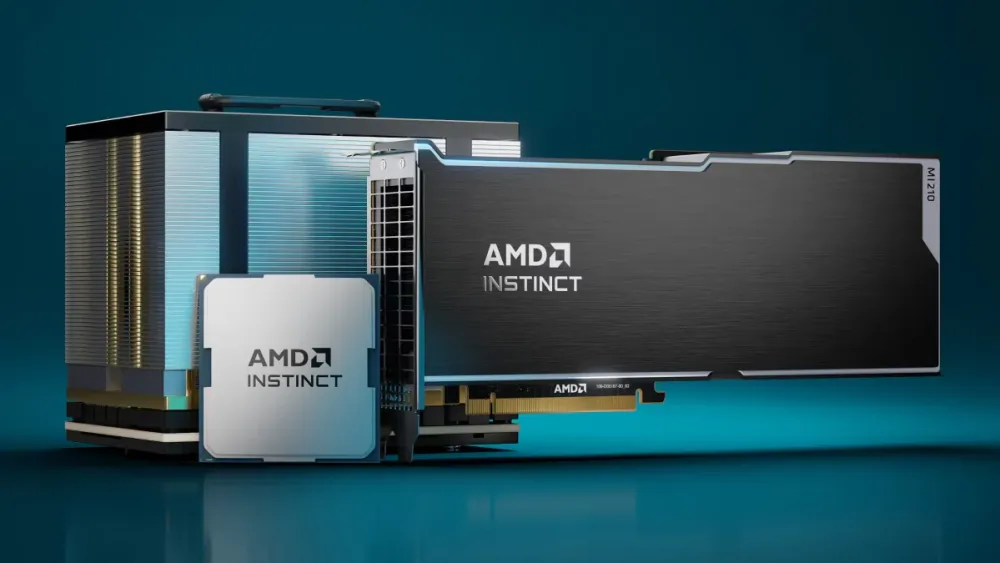

Sam Naffziger, AMD’s Senior Vice President, emphasizes that greater scale begets greater efficiency. This principle is already embodied in AMD’s chiplet architecture, which sidestepped the reticle-size limits of photolithography and delivered strong performance per watt. The MI300 series is the culmination of these efforts: dense 3D assemblies that integrate compute, I/O, and interconnects within a single package.

The next chapter is MI400, AMD’s first full-fledged rack-scale platform. It will use UALink, an open industry-standard accelerator interconnect, and directly challenge Nvidia’s ecosystem, which scales GPUs into the hundreds per rack. Looking further ahead, AMD is exploring a shift from copper to photonic interconnects, which promise vast bandwidth gains but are currently constrained by laser power demands and engineering hurdles.

Yet energy efficiency is not solely a hardware pursuit. AMD is betting on deep hardware-software co-design. The company is advancing its ROCm platform and optimizing it for mainstream frameworks ranging from PyTorch to vLLM. Recent acquisitions, including Nod.ai, Mipsology, and Brium, have bolstered its software capabilities, and the hiring of Sharon Zhou from the startup Lamini further strengthens its AI software team.

AMD is also expanding support for ultra-low-precision formats such as FP8 and FP4, which cut energy costs substantially, typically with little loss in output quality. However, such transitions take time: full FP8 support in vLLM came nearly a year after the launch of the MI300X.
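The precision trade-off behind these formats can be illustrated with a minimal sketch. It assumes the common FP8 E4M3 layout (3 explicit mantissa bits) and FP4 E2M1 layout (1 explicit mantissa bit), and it models only mantissa rounding; exponent range, subnormals, and special values are deliberately ignored. The function name is hypothetical and is not part of ROCm or vLLM.

```python
import math


def round_to_mantissa_bits(x: float, mantissa_bits: int) -> float:
    """Round x to the nearest value with `mantissa_bits` explicit mantissa bits.

    Illustrative only: the limited exponent range of real FP8/FP4 formats
    is not modelled here.
    """
    if x == 0.0:
        return 0.0
    # frexp returns m, e with x == m * 2**e and 0.5 <= |m| < 1.
    m, e = math.frexp(x)
    # One implicit leading bit plus the explicit mantissa bits.
    scale = 2 ** (mantissa_bits + 1)
    return math.ldexp(round(m * scale) / scale, e)


def relative_error(x: float, mantissa_bits: int) -> float:
    """Relative rounding error introduced by the reduced mantissa."""
    return abs(round_to_mantissa_bits(x, mantissa_bits) - x) / abs(x)
```

With this sketch, 1.3 rounds to 1.25 at three mantissa bits and to 1.5 at one, so the coarser format moves less data per value at the price of a larger rounding error. Whether that error is tolerable depends on the model and on the scaling scheme used.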

To track progress on its “20×30” initiative, AMD will use a specialized index that factors in GPU performance, HBM memory throughput, and network bandwidth, with separate weightings for training and inference workloads.
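One way such an index could be structured is sketched below. This is not AMD’s published methodology: the weights, the linear combination, the division by rack power, and all names (`RackMetrics`, `WEIGHTS`, `efficiency_gain`, `combined_index`) are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackMetrics:
    gpu_flops: float      # aggregate GPU compute throughput
    hbm_bandwidth: float  # aggregate HBM memory bandwidth
    net_bandwidth: float  # network bandwidth
    power_watts: float    # rack power draw


# Hypothetical (gpu, hbm, network) weights per workload; NOT AMD's figures.
WEIGHTS = {
    "training": (0.5, 0.25, 0.25),
    "inference": (0.25, 0.5, 0.25),
}


def efficiency_gain(new: RackMetrics, base: RackMetrics, weights) -> float:
    """Weighted capability gain over the baseline, per unit of added power."""
    w_gpu, w_hbm, w_net = weights
    capability = (
        w_gpu * new.gpu_flops / base.gpu_flops
        + w_hbm * new.hbm_bandwidth / base.hbm_bandwidth
        + w_net * new.net_bandwidth / base.net_bandwidth
    )
    return capability / (new.power_watts / base.power_watts)


def combined_index(new: RackMetrics, base: RackMetrics) -> float:
    """Average the training and inference gains (an assumed combination rule)."""
    gains = [efficiency_gain(new, base, w) for w in WEIGHTS.values()]
    return sum(gains) / len(gains)
```

Under these assumptions, a rack that matches its baseline scores 1.0, and reaching the stated goal would correspond to an index of 20. Weighting memory bandwidth more heavily for inference reflects the common observation that inference is often memory-bound, but the specific numbers here are placeholders.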

This strategic focus on next-generation packaging, rack-scale design, and software development underscores AMD’s commitment to driving down energy consumption in an era of explosive AI growth.