AI can generate code—but not always safely. A new study, set to be presented at the 2025 USENIX Security Symposium, reveals that large language models (LLMs), when generating code, frequently fabricate dependencies on non-existent libraries. This phenomenon, dubbed “package hallucination,” not only undermines code quality but also significantly heightens risks to the software supply chain.

The analysis examined 576,000 code samples produced by 16 different models, including both commercial and open-source systems. These samples contained over 2.23 million references to third-party libraries. Alarmingly, nearly 440,000 of them—approximately 19.7%—pointed to non-existent packages. The issue was especially prevalent in open-source models such as CodeLlama and DeepSeek, which exhibited an average false dependency rate of nearly 22%. In contrast, commercial models like ChatGPT showed a rate just above 5%, a disparity attributed to their more sophisticated architectures and fine-tuned optimization.

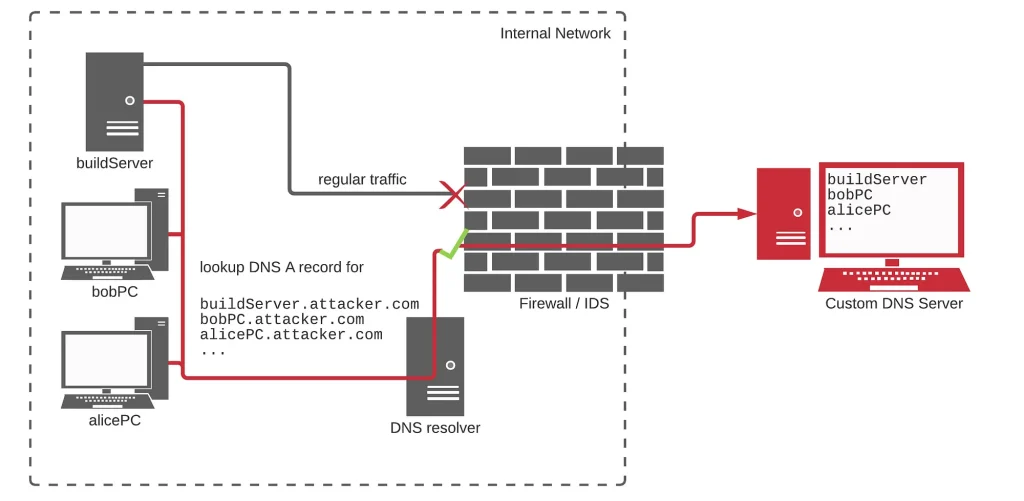

Perhaps most concerning is that these hallucinations were not random. When prompts that had produced a hallucination were re-run ten times each, 43% of the fictitious packages reappeared in all ten queries, and in 58% of cases the same fictitious package recurred more than once across the ten iterations. This consistency makes them ideal targets for dependency confusion attacks, in which malicious actors publish harmful packages under the names that build tools are expected to request. If an attacker registers a hallucinated name in advance, their code could be automatically fetched and executed during a project's build process, particularly if it appears to be a newer version.
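The repetition described above can be pictured with a small counting exercise. The sketch below is illustrative only and is not the study's methodology: it assumes each run of a prompt yields a list of package names and that a set of known-good names is available. All package names, and the helpers `recurrence_counts` and `repeated_in_every_run`, are hypothetical.

```python
from collections import Counter


def recurrence_counts(runs, known_packages):
    """Return how many runs mentioned each package name not in known_packages."""
    known = set(known_packages)
    counts = Counter()
    for packages in runs:
        # A set ensures a name is counted at most once per run.
        for name in set(packages) - known:
            counts[name] += 1
    return counts


def repeated_in_every_run(counts, total_runs):
    """Names that appeared in all runs: the most predictable targets."""
    return sorted(name for name, n in counts.items() if n == total_runs)


if __name__ == "__main__":
    # Hypothetical stand-in for a registry snapshot.
    known = {"requests", "numpy"}
    # Hypothetical dependencies extracted from three runs of one prompt.
    runs = [
        ["requests", "fastjsonx"],
        ["numpy", "fastjsonx"],
        ["requests", "fastjsonx", "quickparse"],
    ]
    counts = recurrence_counts(runs, known)
    print(dict(counts))
    print(repeated_in_every_run(counts, len(runs)))
```

A name that comes back in every run is exactly the kind an attacker could predict and register ahead of time.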

Such attacks have already been demonstrated in real-world scenarios. In 2021, a security researcher successfully ran proof-of-concept code inside the infrastructures of major corporations, including Apple, Microsoft, and Tesla, by exploiting dependency confusion. The vulnerability arises when systems prioritize higher version numbers, even if the source is unverified. Now, AI models may unwittingly hand attackers suitable names by suggesting phantom packages that are absent from official repositories and therefore free for anyone to register.
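To make the version-priority weakness concrete, here is a toy model of dependency resolution. It does not reproduce any real package manager's algorithm. The `Candidate` type, the index labels, and the package name `acme-utils` are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    version: tuple  # e.g. (1, 2, 0)
    index: str      # hypothetical label for where the package is hosted


def resolve_highest_version(name, candidates):
    """Naive resolver: pick the highest version regardless of source."""
    matches = [c for c in candidates if c.name == name]
    return max(matches, key=lambda c: c.version) if matches else None


def resolve_trusted_only(name, candidates, trusted_index):
    """Safer variant: consider only candidates from one trusted index."""
    matches = [c for c in candidates
               if c.name == name and c.index == trusted_index]
    return max(matches, key=lambda c: c.version) if matches else None


if __name__ == "__main__":
    candidates = [
        Candidate("acme-utils", (1, 2, 0), "internal"),  # legitimate package
        Candidate("acme-utils", (99, 0, 0), "public"),   # attacker's upload
    ]
    print(resolve_highest_version("acme-utils", candidates).index)
    print(resolve_trusted_only("acme-utils", candidates, "internal").index)
```

In this model the naive resolver selects the attacker's upload simply because 99.0.0 outranks 1.2.0, while restricting resolution to a trusted source sidesteps the comparison entirely.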

The study also found that JavaScript code is more susceptible to hallucinated dependencies than Python—21% versus nearly 16%. Researchers attribute this to the greater complexity and scale of the JavaScript ecosystem, as well as its more convoluted namespace conventions, which make it harder for models to “recall” package names accurately.

According to the authors, neither the size of an open-source model nor architectural variations within that category had a clear impact on hallucination rates. Instead, the quantity of training data, the rigor of fine-tuning, and the implementation of safety mechanisms played far more significant roles. Commercial LLMs like ChatGPT, which benefit from more robust training and safety frameworks, demonstrated far fewer instances of false dependencies.

As modern developers increasingly rely on third-party libraries to accelerate development and reduce manual coding, package hallucination emerges as a particularly perilous threat. Microsoft has previously predicted that within five years, up to 95% of all code will be AI-generated. If that holds, uncritical trust in AI recommendations becomes a potential vector for widespread infrastructure compromise, with the damage done during the build process itself.

This looming threat underscores the urgent need for stronger safeguards in software build security, including manual verification of package names, restricted download sources, active monitoring of new package uploads to public repositories, and the integration of defensive mechanisms within dependency management systems.
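As one possible shape for the name-verification step, the sketch below flags any requirement whose name is not on a reviewed allowlist before anything is installed. It is a minimal illustration under stated assumptions: the allowlist, the sample requirement lines, and the helper `unvetted_requirements` are hypothetical, and the parsing handles only simple `name` plus version-specifier lines (not URLs or extras). The name normalization follows the rule described in PEP 503.

```python
import re

# Leading run of characters that can form a package name.
_NAME_RE = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*")


def normalize(name):
    """Normalize a package name as PEP 503 describes (case, '-', '_', '.')."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted_requirements(lines, allowlist):
    """Return requirement names that are not on the reviewed allowlist."""
    allowed = {normalize(n) for n in allowlist}
    flagged = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):   # skip blanks and option lines
            continue
        match = _NAME_RE.match(line)
        if match and normalize(match.group(0)) not in allowed:
            flagged.append(match.group(0))
    return flagged


if __name__ == "__main__":
    allowlist = {"requests", "flask-login"}    # hypothetical reviewed names
    requirements = [
        "requests==2.32.0",
        "Flask_Login>=0.6",
        "fastjsonx  # suggested by an assistant, never reviewed",
    ]
    print(unvetted_requirements(requirements, allowlist))
```

A check like this could run in CI before installation. Restricting download sources is a separate control, for example through pip's `--index-url` and `--require-hashes` options.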