AI-powered code generation tools have made developers' lives easier, but they have also introduced new security risks. Chief among them are “hallucinations”: the tendency of AI assistants to invent names of software packages that do not exist.

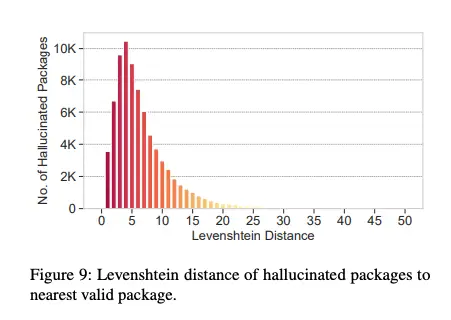

Researchers had already observed that code-generation tools frequently suggest libraries that do not exist. According to a recent study, approximately 5.2% of the package names proposed by commercial AI models are entirely fictitious. For open-source models, the figure is significantly higher at 21.7%.

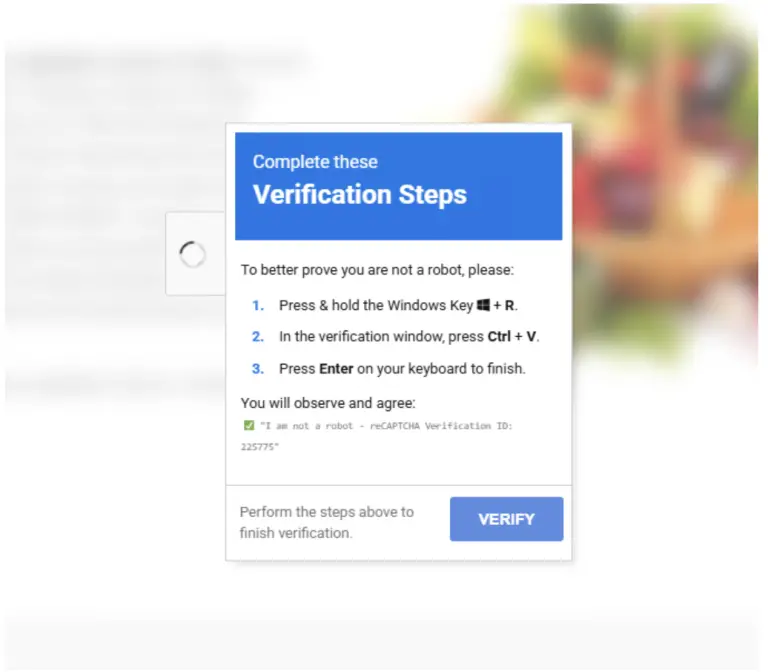

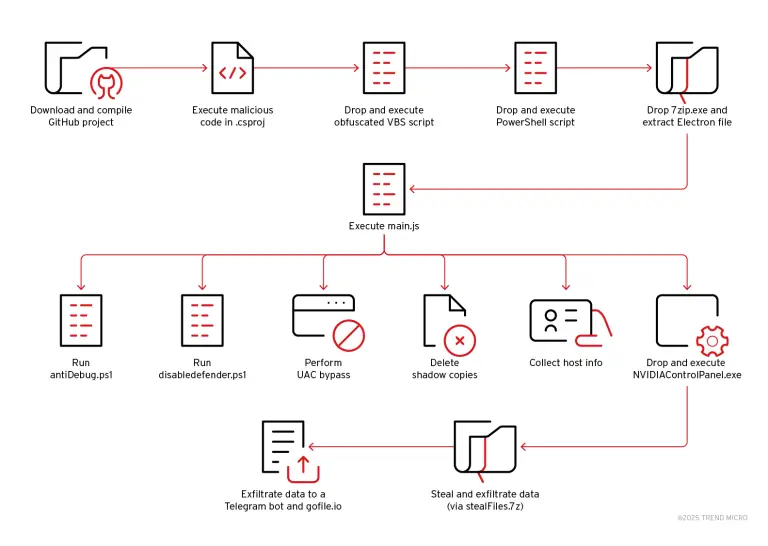

Ordinarily, running code that references a non-existent package simply fails with a harmless error. Attackers, however, were quick to find a way to exploit this behavior. They publish a malicious package under a hallucinated name to a public repository such as PyPI or npm. The next time an AI assistant suggests that name and a developer installs the project's dependencies, the malware is downloaded automatically.

Studies show that these hallucinated package names are not entirely random: some recur consistently, while others vanish entirely. For example, when the same test queries were repeated, 43% of the fictitious names reappeared every time, while 39% never appeared again.
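To make the recurrence figures concrete, here is a minimal Python sketch of how such shares could be computed. It assumes that each repetition of a prompt yields a set of hallucinated package names. The function name `recurrence_stats` and the sample names are hypothetical, and this is not the study's own methodology or code.

```python
from collections import Counter


def recurrence_stats(runs: list[set[str]]) -> tuple[float, float]:
    """Return (share of names seen in every run, share seen in exactly one run).

    `runs` holds the set of hallucinated names observed in each repetition
    of the same prompt (a hypothetical input format).
    """
    # Each name is counted at most once per run because runs are sets.
    counts = Counter(name for run in runs for name in run)
    total = len(counts)
    if total == 0:
        return 0.0, 0.0
    every_run = sum(1 for c in counts.values() if c == len(runs))
    single_run = sum(1 for c in counts.values() if c == 1)
    return every_run / total, single_run / total


# Hypothetical output of three repetitions of one prompt.
runs = [{"fastjsonx", "pyauthlite"}, {"fastjsonx", "torchhelperz"}, {"fastjsonx"}]
print(tuple(round(x, 2) for x in recurrence_stats(runs)))  # → (0.33, 0.67)
```

Names in the first group are the ones an attacker would find most worth registering, because they are predictable.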

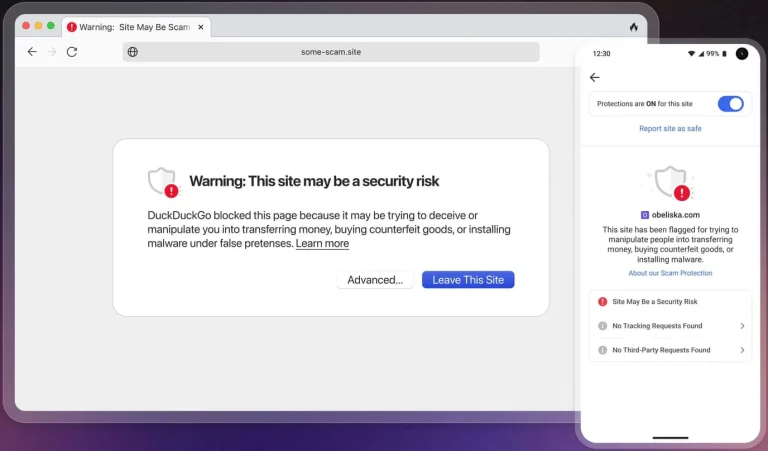

This tactic has been dubbed “slopsquatting,” a term modeled on “typosquatting,” in which attackers register packages or domain names that closely resemble legitimate ones. Seth Larson, a security engineer at the Python Software Foundation, notes that the full scope of the problem remains unknown. He advises developers to thoroughly verify all output from AI assistants before using it in real-world projects.
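Typosquatting relies on near-miss names, so one cheap guard is to flag a requested name that closely resembles a trusted one without matching it exactly. The sketch below uses the standard library's `difflib.get_close_matches`. The `KNOWN_PACKAGES` list, the `lookalikes` helper and the 0.8 cutoff are illustrative assumptions, not a PSF or PyPI tool.

```python
import difflib

# Hypothetical list of packages a team already uses and trusts.
KNOWN_PACKAGES = ["requests", "numpy", "pandas", "async-mutex"]


def lookalikes(candidate: str, known: list[str] = KNOWN_PACKAGES,
               cutoff: float = 0.8) -> list[str]:
    """Return trusted names that closely resemble `candidate` but are not identical to it."""
    matches = difflib.get_close_matches(candidate, known, n=3, cutoff=cutoff)
    # An exact match is the trusted package itself, not a lookalike.
    return [m for m in matches if m != candidate]


print(lookalikes("requestes"))  # → ['requests']
print(lookalikes("requests"))   # → []
```

A similarity check like this catches typos. It cannot catch a hallucinated name that resembles nothing the team already knows, which is why verifying the package itself still matters.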

Developers may try to install non-existent packages for various reasons, from typographical errors to internal package names that unintentionally match names on public repositories.

Feross Aboukhadijeh, CEO of Socket, argues that AI has become so deeply embedded in the development process that many developers now trust its suggestions blindly. This has led to a proliferation of “phantom” packages: names that sound plausible but do not exist. Alarmingly, when attackers register such names, the resulting packages often come with convincing descriptions, fake GitHub repositories, and even blog posts designed to make them look authentic.

Another emerging concern is AI-generated summaries in search engines. Platforms such as Google frequently recommend malicious or non-existent packages because their summaries simply aggregate unverified content from repository pages without any critical review.

A notable incident occurred in January, when Google's AI Overview recommended the malicious package @async-mutex/mutex in place of the legitimate async-mutex. More recently, a hacker operating under the alias “_Iain” published detailed instructions on dark web forums for building a botnet from malicious npm packages, a process partly automated with ChatGPT.

The Python Software Foundation is acutely aware of the threat and is actively developing tools to defend the PyPI repository. The organization urges users to scrutinize package names and contents before installation, and recommends that large organizations rely on repository mirrors containing pre-vetted libraries to mitigate risk.
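The PSF's mirror recommendation can be approximated with a simple check: before installing anything, compare every name in a requirements list against the set of packages approved on an internal, pre-vetted mirror. The following is a minimal sketch. The `VETTED` set, the `unvetted` helper and the example names are hypothetical. The `normalize` function follows the name-normalization rule from PEP 503.

```python
import re

# Hypothetical set of package names approved on an internal, pre-vetted mirror.
VETTED = {"requests", "numpy", "async-mutex"}


def normalize(name: str) -> str:
    """Normalize a project name as in PEP 503: runs of '-', '_', '.' become '-', then lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(requirements: list[str], vetted: set[str] = VETTED) -> list[str]:
    """Return requirement names that are not on the vetted list."""
    allowed = {normalize(v) for v in vetted}
    missing = []
    for line in requirements:
        line = line.split("#", 1)[0].strip()  # drop inline comments and whitespace
        if not line:
            continue  # skip blank lines
        # Take the bare project name before any extras, version specifier or marker.
        # Option lines such as "-r base.txt" start with '-' and do not match.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match and normalize(match.group(0)) not in allowed:
            missing.append(match.group(0))
    return missing


reqs = [
    "requests>=2.31",
    "NumPy==1.26.4",
    "example-hallucinated-pkg  # suggested by an assistant",
    "-r base.txt",
    "",
]
print(unvetted(reqs))  # → ['example-hallucinated-pkg']
```

This only checks names, not versions or package contents. One way to enforce the same policy at install time is to point pip at the internal mirror with its `--index-url` option, so unapproved names cannot be resolved from the public index.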