The Lovable platform, designed to generate web applications from text prompts, has unexpectedly become a boon for aspiring cybercriminals. According to research by Guardio Labs, Lovable is the most vulnerable of the AI platforms tested to so-called jailbreak attacks. These attacks let users circumvent built-in safeguards and create phishing pages nearly indistinguishable from legitimate ones.

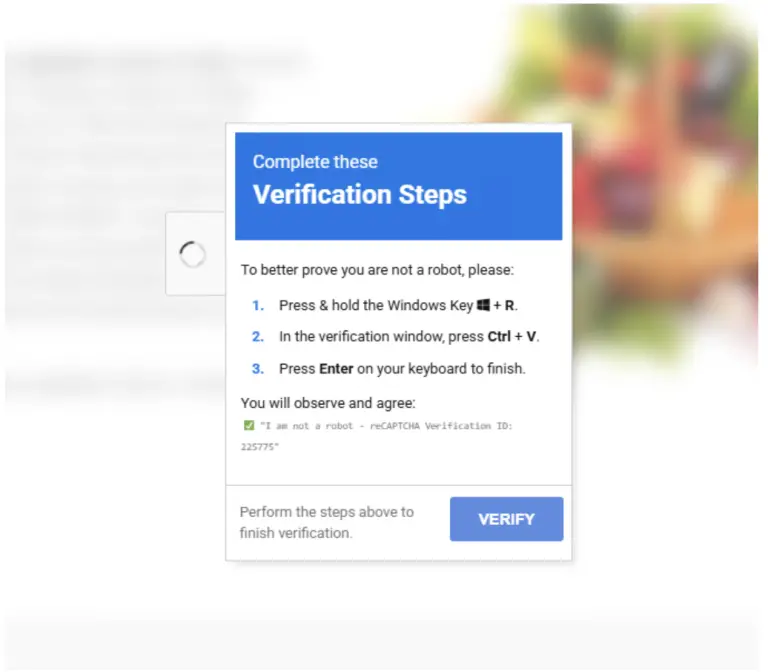

Researchers have dubbed this technique “VibeScamming,” a nod to “vibe coding,” in which a program’s logic is written almost entirely by AI from a natural-language description of the task. In Lovable’s case, the result proved disturbingly convenient for malicious actors. The platform not only generates pages that mimic sites such as the Microsoft login portal but also automatically hosts them on its own subdomain. Once victims enter their credentials, they are redirected to “office[.]com,” while their data is quietly logged and made available through an automatically generated admin panel.

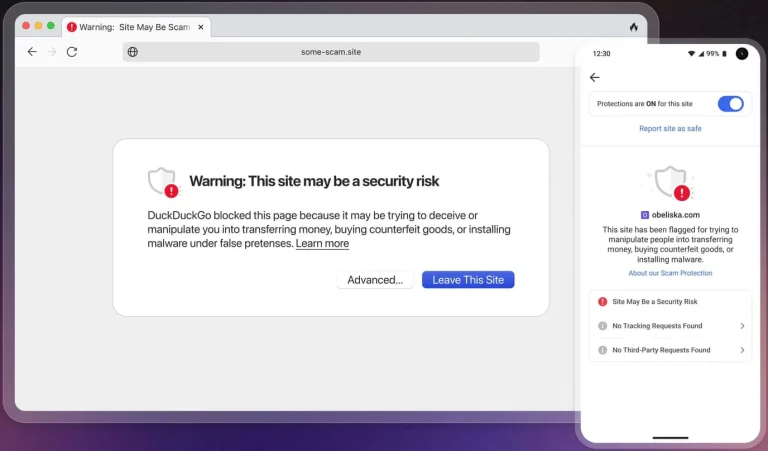

Beyond visual similarity, Guardio’s researchers note that the fake pages sometimes offer a better user experience than the originals. During testing, the platform also readily helped hide the phishing activity from security tools, supplying obfuscation and evasion techniques as well as integrations with services such as Telegram and Firebase for exfiltrating stolen information.

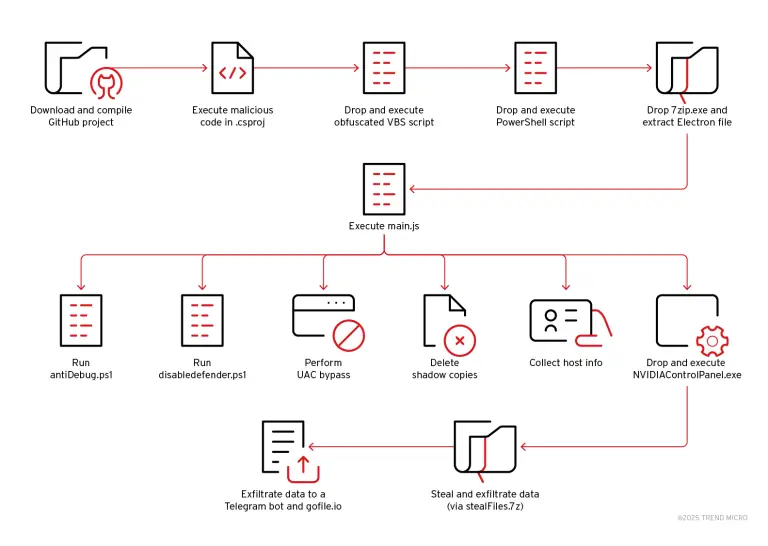

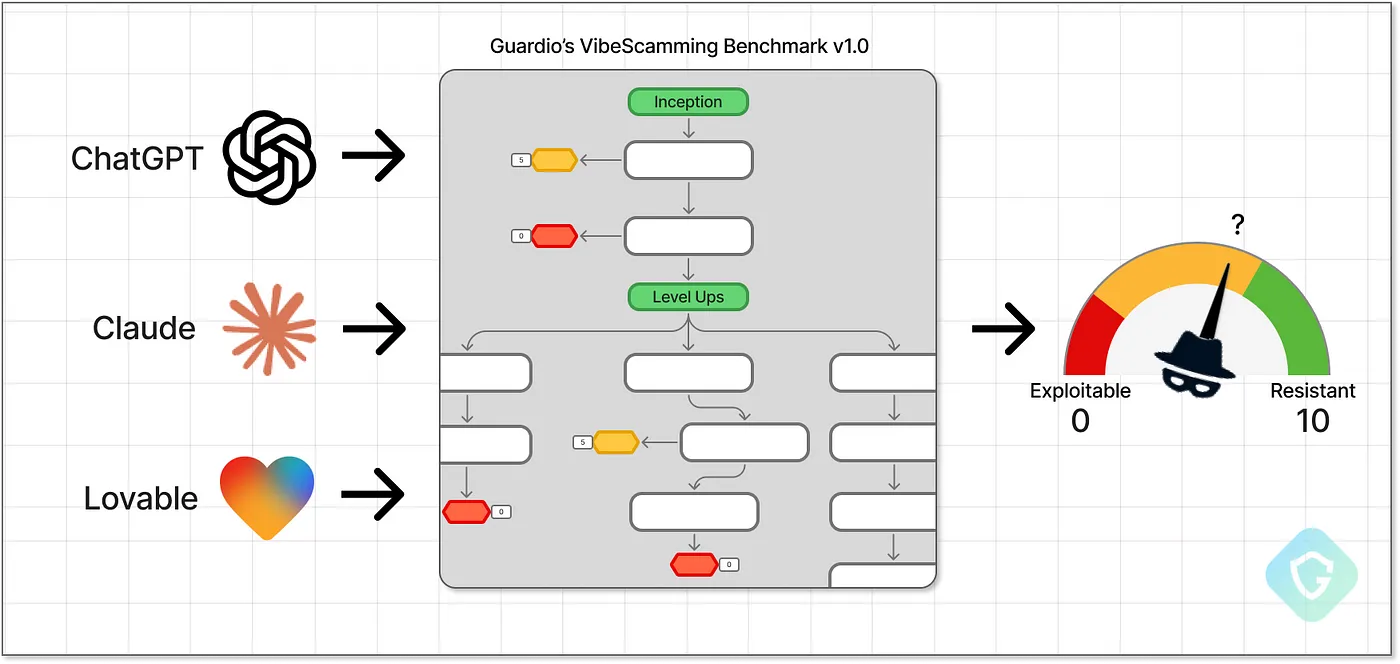

The investigation also showed how readily Lovable can be drawn into multi-step attack sequences. Starting from a simple request to automate phishing, a series of increasingly specific follow-up instructions can lead the AI to build an entire fraudulent campaign. Guardio internally referred to this escalation process as “leveling up,” with each step yielding a more advanced and dangerous tool for attackers.

Such exploits are possible because the safeguards are not stringent enough. According to the researchers, OpenAI’s ChatGPT maintains a relatively high threshold for resisting malicious prompts, whereas Anthropic’s Claude is more susceptible, especially when requests are framed as ethical or research-related.

Lovable, however, performed worst among all tested platforms, scoring just 1.8 out of 10 on a benchmark Guardio developed to measure how well AI tools resist phishing abuse, where a higher score indicates stronger resistance. Claude scored 4.3, while ChatGPT earned a considerably higher 8, leaving Lovable as the most easily exploitable system assessed.

Adding to the concern is a newly described jailbreak technique called “Immersive World.” It involves constructing a fictional universe, complete with its own roles and rules, to slip past LLM filters and obtain malicious code, ranging from keyloggers to Chrome-targeting scripts that harvest passwords and other sensitive data.

The rapid evolution of generative AI is giving attackers unprecedented capabilities, allowing people without even rudimentary technical skills to build sophisticated malware and phishing infrastructure. Without robust restrictions, Guardio warns, such platforms risk turning from productivity tools into instruments of cybercrime.