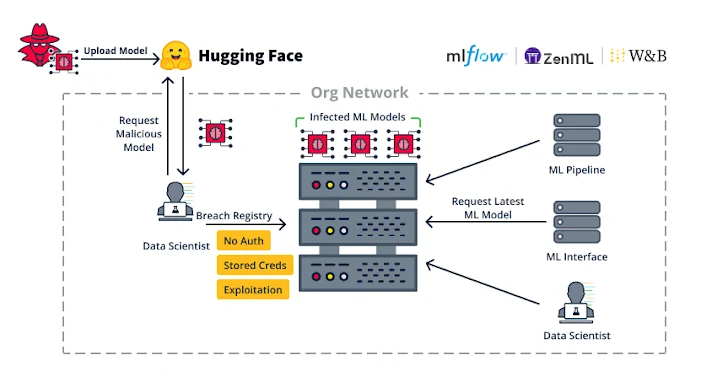

Cybersecurity researchers are warning of significant risks in the software supply chain for machine learning (ML). More than 20 vulnerabilities were recently identified across various MLOps platforms, which could be exploited to execute arbitrary code or load malicious datasets.

MLOps platforms enable the design and execution of machine learning model pipelines, storing models in repositories for future application use or API access. However, certain aspects of these technologies render them vulnerable to attacks.

In their report, researchers from JFrog highlight two kinds of vulnerabilities: those stemming from the core formats and processes themselves, and those caused by implementation flaws. As an example of the first kind, some model formats, such as Pickle files, run embedded code automatically when a model is loaded, so an attacker who can supply a model file can execute code on the machine that loads it.
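To make the Pickle risk concrete, here is a minimal sketch in Python. It is not taken from the JFrog report: the `Payload` class, the `SafeUnpickler` class and the `safe_loads` helper are hypothetical names used for illustration, and the payload calls the harmless built-in `len` where a real attacker would name something like `os.system`. The restricted loader follows the `find_class` override pattern described in the Python `pickle` documentation.

```python
import io
import pickle


class Payload:
    """Stand-in for a malicious 'model': the pickle stream names a function to call."""

    def __reduce__(self):
        # Harmless here (len); an attacker could name any importable callable.
        return (len, ("payload",))


class SafeUnpickler(pickle.Unpickler):
    """Refuses every global lookup, so only plain built-in data types can load."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def safe_loads(data: bytes):
    return SafeUnpickler(io.BytesIO(data)).load()


blob = pickle.dumps(Payload())

# Plain pickle.loads runs the embedded call and returns its result, not a Payload.
result = pickle.loads(blob)

# The restricted loader rejects the same bytes but still accepts plain data.
try:
    safe_loads(blob)
    blocked = False
except pickle.UnpicklingError:
    blocked = True

plain = safe_loads(pickle.dumps({"weights": [0.1, 0.2]}))
```

A loader this strict cannot load real model objects, which is the point of the sketch: any format that must resolve arbitrary callables at load time has to be treated as executable code from whoever produced the file.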

The danger also lies in the use of popular development environments like JupyterLab, which allows for code execution and output display in an interactive mode. The issue is that the output could include HTML and JavaScript, which are automatically executed by the browser, creating potential vulnerabilities for cross-site scripting (XSS) attacks.

One example of such a vulnerability was found in MLflow, where insufficient sanitization of data allows client-side code to execute in JupyterLab, posing a security threat.
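The general defense against this class of issue is to escape untrusted text before it is rendered as HTML. The sketch below is illustrative only and is not the MLflow fix: `render_cell_output` is a hypothetical helper, and the `malicious` string is an assumed example of what an attacker might plant in a dataset field.

```python
import html


def render_cell_output(untrusted: str) -> str:
    """Return an HTML fragment that displays untrusted text as text, not markup."""
    return f"<pre>{html.escape(untrusted, quote=True)}</pre>"


# Hypothetical value an attacker might plant in a dataset field or run name.
malicious = '<script>alert("xss")</script>'
safe_fragment = render_cell_output(malicious)
```

After escaping, the browser displays the angle brackets and quotes as literal characters, so the script tag is shown rather than executed.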

A second type of vulnerability relates to implementation deficiencies, such as the absence of authentication on MLOps platforms, which allows attackers with network access to execute code by leveraging ML Pipeline functions. These attacks are not merely theoretical: they have already been used in real-world malicious operations, such as the deployment of cryptocurrency miners on vulnerable platforms.
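As a rough illustration of the missing control, the sketch below shows a bearer-token check that a pipeline endpoint could apply before running any job. It assumes a simple shared-secret scheme and does not reflect how any particular MLOps platform handles authentication; `API_TOKEN` and `is_authorized` are hypothetical names.

```python
import hmac
import secrets

# Hypothetical shared secret; in practice it would come from a secrets manager.
API_TOKEN = secrets.token_urlsafe(32)


def is_authorized(headers: dict) -> bool:
    """Accept a request only if it carries the expected bearer token."""
    supplied = headers.get("Authorization", "")
    expected = f"Bearer {API_TOKEN}"
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(supplied.encode(), expected.encode())
```

A request handler would call `is_authorized` first and refuse to submit a pipeline run when it returns `False`, so network access alone is no longer enough to reach the code-execution functions.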

Another vulnerability involves container escape in Seldon Core, allowing attackers to go beyond code execution and spread within the cloud environment, gaining access to other users’ models and data.

All of these vulnerabilities could be exploited for infiltration and lateral movement within an organization, as well as the compromise of servers. Researchers emphasize the importance of ensuring the isolation and protection of the environment in which models operate to prevent the possibility of arbitrary code execution.

JFrog’s report follows recent disclosures of vulnerabilities in other open-source tools, such as LangChain and Ask Astro, which could lead to data leaks and other security threats.

Supply chain attacks in the realm of artificial intelligence and machine learning are becoming increasingly sophisticated and difficult to detect, posing new challenges for cybersecurity professionals.