Micron recently unveiled a series of memory and storage products designed to accelerate the advancement of artificial intelligence (AI). Among these, the new HBM3E products have drawn the most attention. Micron had previously announced that HBM3E had entered mass production and would be used in Nvidia’s H200, with shipments slated to begin in the second quarter of 2024.

Girish Cherussery, Senior Director of Product Management at Micron, recently discussed the broad market for high-bandwidth memory (HBM) and its applications in today’s technology landscape. Cherussery described HBM, an industry-standard in-package memory, as a transformative product that delivers higher bandwidth and better energy efficiency in a smaller form factor, meeting the growing memory demands of increasingly complex large language models (LLMs).

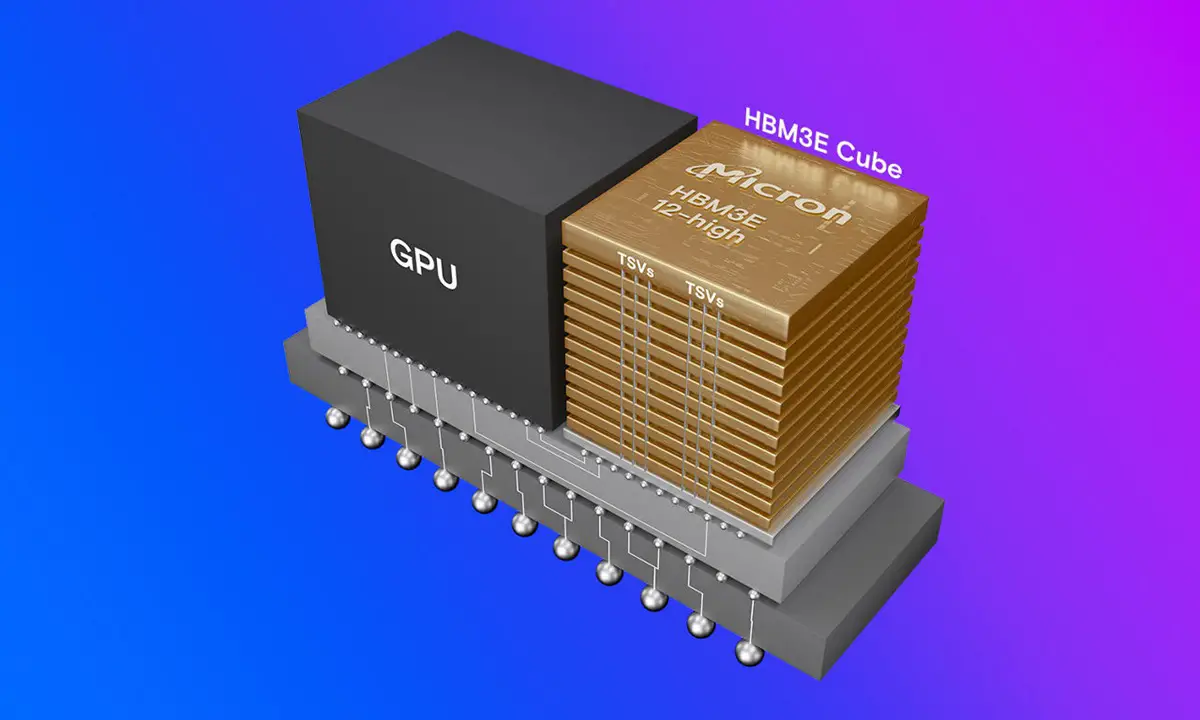

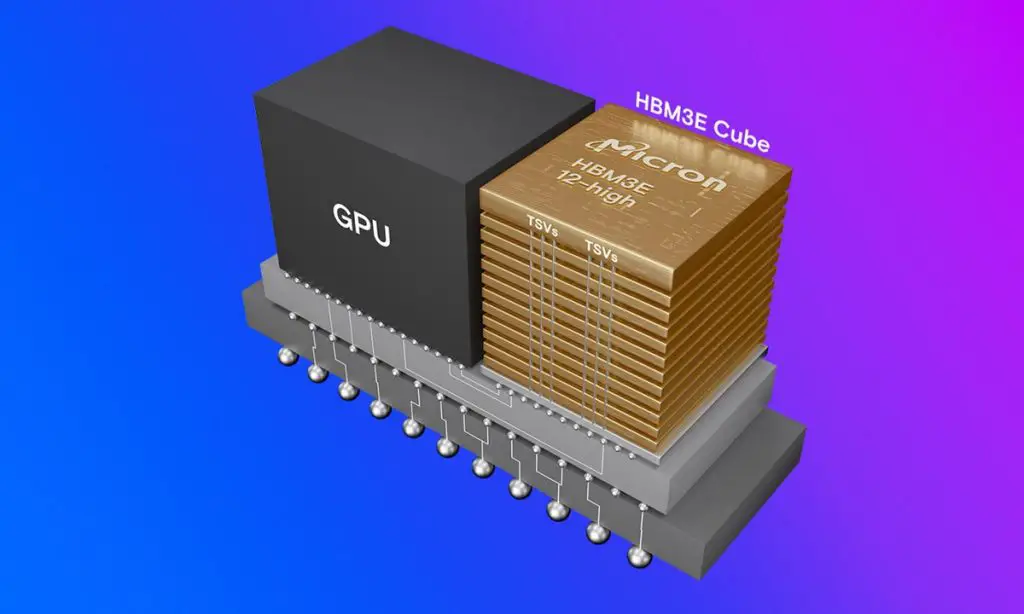

Micron’s current HBM3E products feature 16 independent high-frequency data channels and are built on the 1β (1-beta) process, in an 11mm x 11mm package. They come in 24GB and 36GB capacities, built from 8-layer and 12-layer stacks of 24Gb dies respectively, and deliver bandwidth exceeding 1.2TB/s at pin speeds above 9.2Gb/s. Micron says it has doubled the number of through-silicon vias (TSVs), reduced package interconnects by 25%, and achieved 30% lower power consumption than competing products. Using advanced CMOS technology and sophisticated packaging techniques, Micron has also made structural changes that lower thermal impedance, improving the overall thermal performance of the cubes. Combined with the significantly reduced power consumption, these changes give customers HBM3E products that draw less power and are easier to cool.
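The capacity and bandwidth figures above can be checked with simple arithmetic. The Python sketch below is only an illustration, not Micron’s own calculation. It treats the pin speed as gigabits per second per pin and assumes each of the 16 channels is 64 bits wide (a 1024-bit interface, as in the JEDEC HBM3 standard; the article does not state the channel width). The function names are hypothetical.

```python
BITS_PER_BYTE = 8


def stack_capacity_gbytes(layers: int, die_gbit: int = 24) -> float:
    """Capacity of one HBM stack in GB, given the layer count and die density in Gb."""
    return layers * die_gbit / BITS_PER_BYTE


def stack_bandwidth_gbytes_per_s(pin_rate_gbit_s: float,
                                 channels: int = 16,
                                 bits_per_channel: int = 64) -> float:
    """Peak bandwidth of one stack in GB/s.

    bits_per_channel=64 is an assumption taken from the HBM3 standard,
    not a figure stated in the article.
    """
    interface_width = channels * bits_per_channel  # 1024 data pins under this assumption
    return pin_rate_gbit_s * interface_width / BITS_PER_BYTE


def min_pin_rate_gbit_s(target_gbytes_per_s: float,
                        channels: int = 16,
                        bits_per_channel: int = 64) -> float:
    """Pin rate in Gb/s needed to reach a target bandwidth in GB/s."""
    return target_gbytes_per_s * BITS_PER_BYTE / (channels * bits_per_channel)


if __name__ == "__main__":
    print(stack_capacity_gbytes(8))            # 8-layer stack of 24Gb dies
    print(stack_capacity_gbytes(12))           # 12-layer stack of 24Gb dies
    print(stack_bandwidth_gbytes_per_s(9.2))   # bandwidth at exactly 9.2 Gb/s per pin
    print(min_pin_rate_gbit_s(1200.0))         # pin rate needed for 1.2 TB/s (decimal units)
```

Under these assumptions, 8 and 12 layers of 24Gb dies work out to 24GB and 36GB, matching the stated capacities. A pin rate of exactly 9.2Gb/s gives roughly 1.18TB/s, so reaching 1.2TB/s requires about 9.4Gb/s per pin, which is consistent with the "exceeding" and "surpassing" qualifiers on both figures.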

Micron also plans to apply the 1β process to other products, including the LPDDR5X product line, driving substantial innovations in system architecture within heterogeneous computing environments.