Thousands of machine learning tools, including ones built by major tech companies, are publicly exposed online. Anyone can interact with them, which could expose confidential data. A security researcher shared his findings with 404 Media, raising concerns about how safely these tools are deployed.

Charan Akiri, a lead security engineer at Reddit, found that the exposed data may include machine learning models, training datasets, hyperparameters, and even the raw data used to build the models. According to Akiri, these misconfigured systems let unauthorized users download or run sensitive models and datasets. He emphasized that the platforms are meant for internal use only.

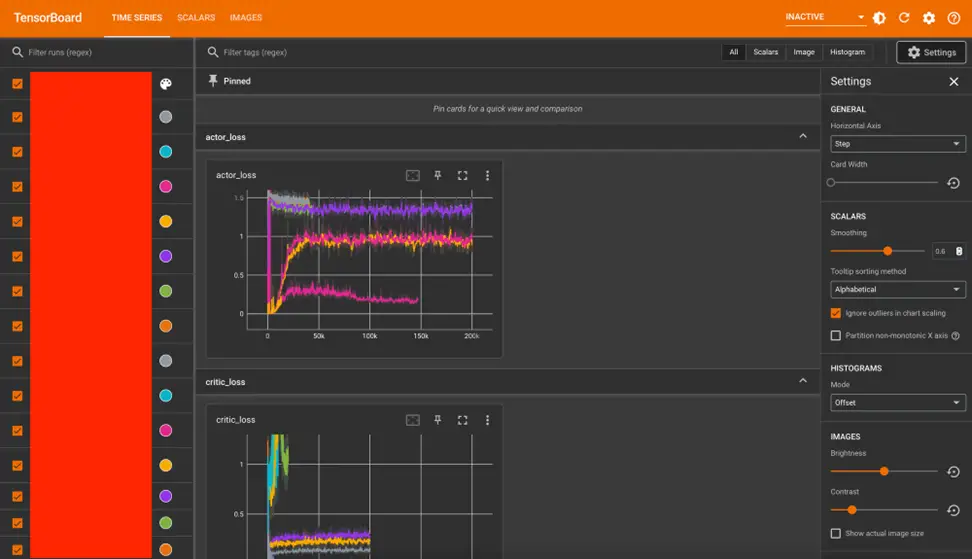

The vulnerable tools include MLflow, Kubeflow, and TensorBoard. These are widely used to train and deploy generative AI models in the cloud and to visualize the results. Because of misconfigurations, many companies are unknowingly leaving these tools open to the public, which could lead to serious data leaks.

One such company is Renesas Electronics, a Japanese semiconductor manufacturer. Akiri traced one of the exposed tools to Renesas through certificate information shown on its dashboard. After 404 Media contacted Renesas, the company promptly fixed the issue but provided no further comment.

Akiri also noted that his research covers only a small part of the problem. Many more companies likely run exposed tools whose owners have not yet been identified.

When 404 Media reporters accessed several publicly exposed MLflow instances, they were able to create new runs and browse experiments logged by earlier users. Akiri estimates that around 5,000 vulnerable MLflow instances may currently be online.