Researchers at North Carolina State University have developed a method called TPUXtract that reconstructs the structure of a neural network by analyzing the electromagnetic (EM) signals a processor emits during operation. The approach could allow cybercriminals or competitors to replicate the architecture of an artificial intelligence model.

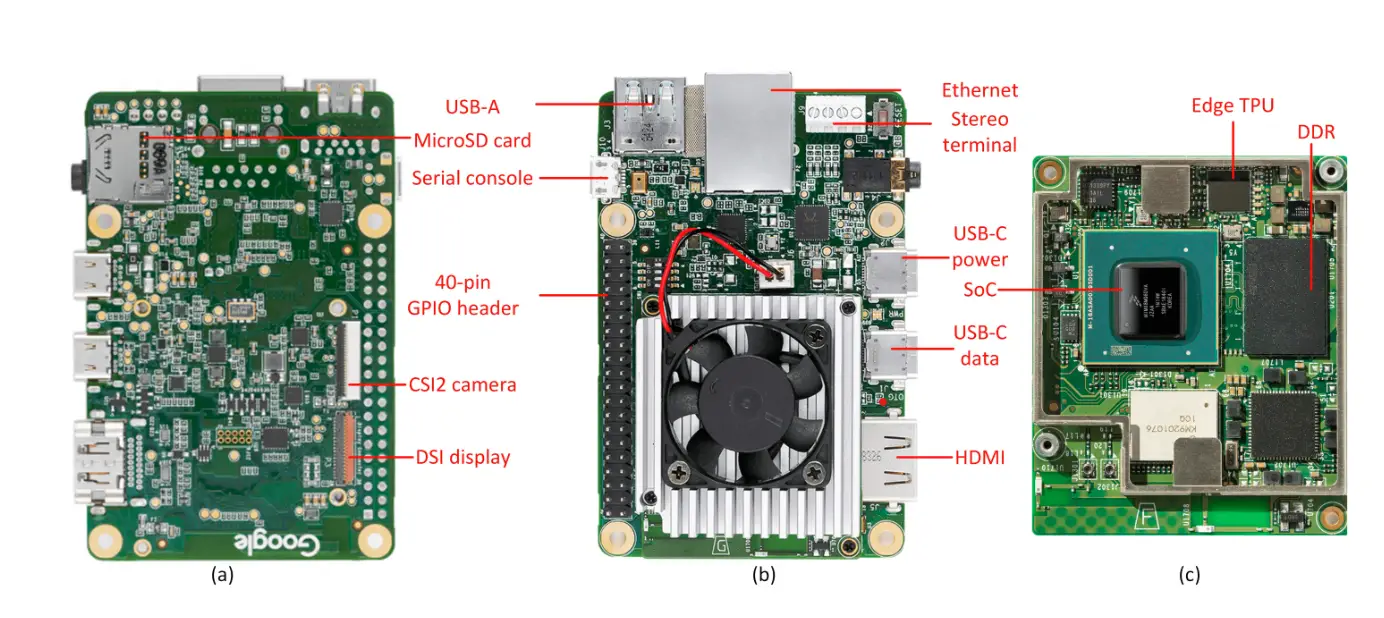

The method relies on measuring EM signals emanating from the Google Edge TPU, a specialized processor designed for machine learning tasks. Using specialized measurement equipment and an “online template creation” technique, the researchers recovered the hyperparameters of a convolutional neural network, the settings that define the model’s architecture and behavior, with 99.91% accuracy.

The experiment was conducted on the Google Coral Dev Board, a single-board computer used for AI applications in IoT devices, medical instruments, and automotive systems. The researchers recorded the EM signals the processor generated while running inference, then built templates describing each layer of the network.

The analysis proceeded in stages. First, the scientists identified markers in the signal that indicate the start of data processing. They then reconstructed the signatures of individual network layers. By generating signatures for thousands of candidate hyperparameter combinations and comparing them with the measured signals, the researchers pieced together the network’s structure one layer at a time.
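The layer-by-layer matching described above can be illustrated with a minimal sketch. It is not the researchers' implementation: `toy_signature` is a hypothetical stand-in that turns hyperparameters into a synthetic waveform, the search space is tiny, and Pearson correlation is assumed as the similarity measure. A real attack would build its templates from measured EM traces.

```python
import itertools
import math
import random


def toy_signature(kernel, filters, stride, length=128):
    """Hypothetical stand-in for the EM template of one layer.

    A real template would come from measurements; here each hyperparameter
    simply contributes one frequency component to a synthetic waveform.
    """
    return [
        math.sin(0.1 * kernel * t)
        + 0.8 * math.cos(0.05 * filters * t)
        + 0.6 * math.sin(0.9 * stride * t)
        for t in range(length)
    ]


def pearson(a, b):
    """Pearson correlation between two equal-length traces."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def recover_layer(measured, search_space):
    """Return the candidate whose template correlates best with the trace."""
    return max(search_space, key=lambda hp: pearson(measured, toy_signature(*hp)))


def recover_network(measured_layers, search_space):
    """Recover hyperparameters one layer at a time, in execution order."""
    return [recover_layer(trace, search_space) for trace in measured_layers]


if __name__ == "__main__":
    rng = random.Random(0)
    # Candidate (kernel, filters, stride) combinations to try for every layer.
    space = list(itertools.product((1, 3, 5, 7), (16, 32, 64), (1, 2)))
    secret = [(3, 32, 1), (5, 64, 2), (1, 16, 1)]
    # Simulated measurements: the true signature plus Gaussian noise.
    traces = [[v + rng.gauss(0, 0.05) for v in toy_signature(*hp)] for hp in secret]
    print(recover_network(traces, space))
```

Because each layer is matched independently against a fixed candidate list, the cost grows with the number of layers times the size of the search space rather than with every possible whole-network configuration, which is what makes an exhaustive per-layer search practical.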

The team reconstructed a neural network that might otherwise take weeks or months to develop in a single day. However, the process demands significant technical expertise and expensive equipment, which currently limits its widespread application. Nonetheless, the researchers note that rival companies could adapt the technique to cut costs and bypass prolonged development cycles.

Beyond intellectual property theft, the TPUXtract method could also be employed to identify vulnerabilities in widely used AI models. Furthermore, when combined with other parameter-recovery techniques, this approach could facilitate the complete replication of AI systems, including their configurations and structures.

To defend against such attacks, the researchers propose introducing “noise” into the inference process itself. For example, inserting dummy operations or randomizing the sequence of network layers during computation would obscure the EM signatures and make reconstructing a model significantly more laborious.
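The idea behind these countermeasures can be sketched in a few lines. The example below is a toy illustration under stated assumptions, not the researchers' proposal in code: `obfuscated_forward`, `dense`, and `dummy_op` are hypothetical names, the network is a pair of small fully connected layers, and the randomization shuffles the order of independent units within a layer rather than reordering whole layers. The point is that the execution schedule changes from run to run while the output stays the same.

```python
import random


def dense(inputs, weights, order):
    """Compute a fully connected layer, visiting output units in the given order."""
    outputs = [0.0] * len(weights)
    for j in order:
        outputs[j] = sum(w * x for w, x in zip(weights[j], inputs))
    return outputs


def dummy_op(rng, size):
    """Throwaway multiply-accumulate work whose result is discarded."""
    junk = [rng.random() for _ in range(size)]
    return sum(a * b for a, b in zip(junk, reversed(junk)))


def obfuscated_forward(inputs, layers, rng, max_dummies=3):
    """Run the layers with random dummy work in between and shuffled unit order."""
    schedule = []
    activations = inputs
    for index, weights in enumerate(layers):
        # A random number of dummy operations blurs the layer boundary.
        for _ in range(rng.randint(0, max_dummies)):
            dummy_op(rng, len(activations))
            schedule.append("dummy")
        # Shuffling independent units changes timing without changing results.
        order = list(range(len(weights)))
        rng.shuffle(order)
        activations = dense(activations, weights, order)
        schedule.append(f"layer{index}")
    return activations, schedule


if __name__ == "__main__":
    layers = [
        [[0.5, -1.0], [1.5, 2.0], [0.0, 1.0]],  # 2 inputs -> 3 units
        [[1.0, 0.5, -0.5], [2.0, -1.0, 0.25]],  # 3 inputs -> 2 units
    ]
    for seed in (1, 2):
        print(obfuscated_forward([1.0, 2.0], layers, random.Random(seed)))
```

The trade-off is extra computation and latency on every inference, which matters on a low-power edge device.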