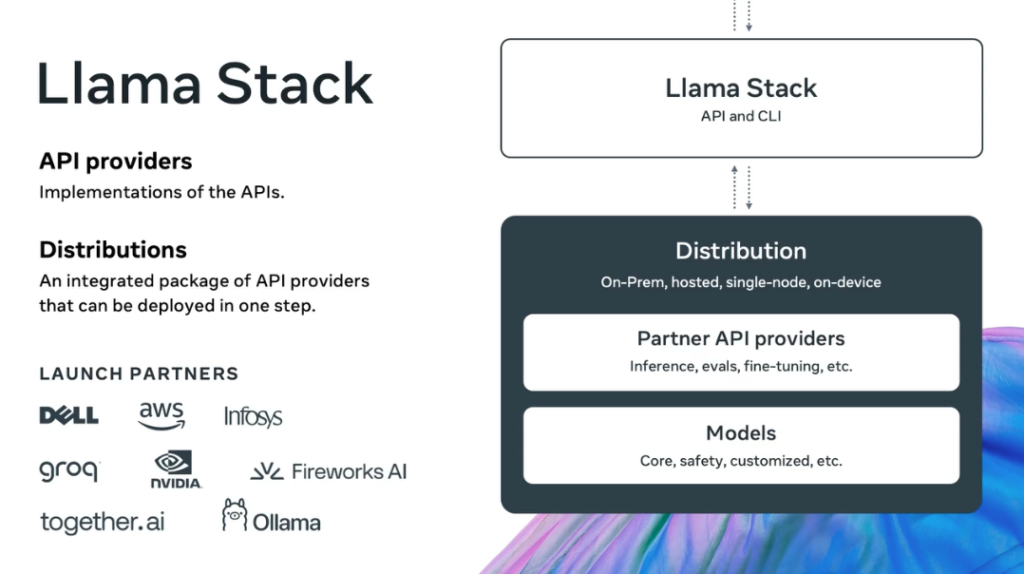

A vulnerability recently discovered in Meta’s Llama LLM framework allows attackers to execute arbitrary code on the llama-stack inference server. Tracked as CVE-2024-50050, it has been assigned a CVSS score of 6.3 (medium severity), although Snyk rated it critical at 9.3.

The issue stems from the deserialization of untrusted data in Llama Stack, the component that defines the APIs for building AI applications, including those built on Meta’s own Llama models. Specifically, the affected code relied on Python’s `pickle` module, which can execute arbitrary code while loading maliciously crafted data.
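To see why loading a pickle is dangerous, the minimal sketch below builds an object whose `__reduce__` method tells `pickle` which callable to invoke while the data is being loaded. The class name `Payload` is hypothetical, and the harmless `os.getcwd` stands in for what a real attacker would use, such as `os.system`.

```python
import os
import pickle


class Payload:
    """Hypothetical attacker-controlled object."""

    def __reduce__(self):
        # pickle records (callable, args); pickle.loads calls
        # callable(*args) while rebuilding the object. os.getcwd is a
        # harmless stand-in for something like os.system.
        return (os.getcwd, ())


# The attacker serializes the payload and ships the bytes to the victim.
malicious_bytes = pickle.dumps(Payload())

# The victim merely loads the bytes: os.getcwd() runs as a side effect,
# and its return value comes back instead of a Payload instance.
result = pickle.loads(malicious_bytes)
print(result)
```

The victim never calls any method on the object; executing the attacker's chosen callable is part of how `pickle.loads` reconstructs it, which is why the Python documentation warns against unpickling untrusted data.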

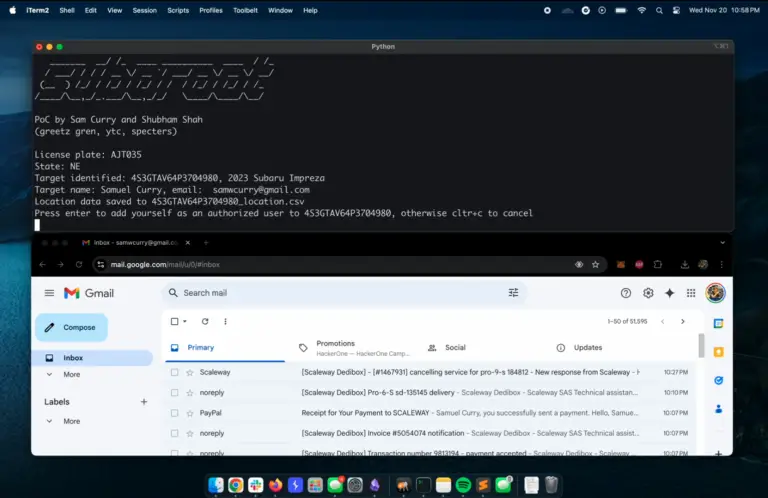

As Avi Lumelsky, a security researcher at Oligo Security, notes, “If an attacker gains network access to a ZeroMQ socket, they can send a specially crafted object, which is then deserialized using the unsafe `recv_pyobj` function, enabling arbitrary code execution on the vulnerable host.”
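The attack path in the quote can be reproduced in miniature with the standard library alone. In this sketch, `socket.socketpair()` stands in for an exposed ZeroMQ socket, and the hypothetical `unsafe_receive` function mimics what pyzmq’s `recv_pyobj` does: read a message and pass it to `pickle.loads`. This is not ZeroMQ itself (which adds its own message framing), and the payload uses the harmless built-in `sorted` in place of a real command.

```python
import pickle
import socket


class CraftedObject:
    """Hypothetical object an attacker sends to an exposed socket."""

    def __reduce__(self):
        # Harmless stand-in: a real exploit would return something like
        # (os.system, ("<attacker command>",)).
        return (sorted, ([3, 1, 2],))


def unsafe_receive(conn: socket.socket) -> object:
    # Mirrors the pattern behind recv_pyobj(): read the raw message
    # bytes, then hand them straight to pickle.loads.
    data = conn.recv(65536)
    return pickle.loads(data)


# socketpair() stands in for a network-reachable socket.
attacker, server = socket.socketpair()
attacker.sendall(pickle.dumps(CraftedObject()))
received = unsafe_receive(server)
attacker.close()
server.close()

print(received)  # prints [1, 2, 3]: sorted() ran on the receiving side
```

Nothing in the receiving code looks suspicious; the attacker’s callable runs inside `pickle.loads` before the application ever inspects the object.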

The flaw was reported through responsible disclosure on September 24, 2024, and Meta fixed it on October 10, 2024, in version 0.0.41, replacing the insecure pickle serialization format with a safer JSON-based alternative. The issue has also been addressed in pyzmq, the Python library that provides access to the ZeroMQ messaging library.
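The general idea behind the fix can be sketched with the standard library’s `json` module plus explicit validation. This is an illustration under assumptions, not Meta’s actual patch: the `InferenceRequest` type, its fields, and the `decode_request`/`encode_request` helpers are hypothetical.

```python
import json
import pickle
from dataclasses import dataclass


@dataclass
class InferenceRequest:
    """Hypothetical message schema; not Llama Stack's actual type."""
    model: str
    prompt: str


def decode_request(raw: bytes) -> InferenceRequest:
    # json.loads only builds dicts, lists, strings, numbers, booleans
    # and None, so decoding cannot trigger code execution.
    payload = json.loads(raw)
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    model = payload.get("model")
    prompt = payload.get("prompt")
    # Validate field types explicitly before building the typed object.
    if not isinstance(model, str) or not isinstance(prompt, str):
        raise ValueError("'model' and 'prompt' must be strings")
    return InferenceRequest(model=model, prompt=prompt)


def encode_request(req: InferenceRequest) -> bytes:
    return json.dumps({"model": req.model, "prompt": req.prompt}).encode("utf-8")


round_trip = decode_request(encode_request(InferenceRequest("example-model", "Hello")))
print(round_trip)  # prints InferenceRequest(model='example-model', prompt='Hello')

# A pickle stream, as an attacker might send, is not valid JSON, so it is
# rejected with a ValueError instead of being executed.
try:
    decode_request(pickle.dumps({"model": "x"}))
    rejected = False
except ValueError:
    rejected = True
print(rejected)  # prints True
```

The design choice is that the wire format can only describe plain data; turning that data into application objects happens in code the server controls, after validation.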

This is not the first flaw of its kind in AI frameworks. In August 2024, Oligo Security reported a vulnerability in TensorFlow’s Keras that bypassed the protections introduced for CVE-2024-3660 and allowed arbitrary code execution through Python’s unsafe `marshal` module.

The Llama Stack incident underscores the need for stronger security in AI frameworks. As these frameworks are deployed more widely, a single flaw affects more systems, so developers should avoid deserializing untrusted data with formats such as pickle or marshal and should not expose internal messaging sockets to untrusted networks.