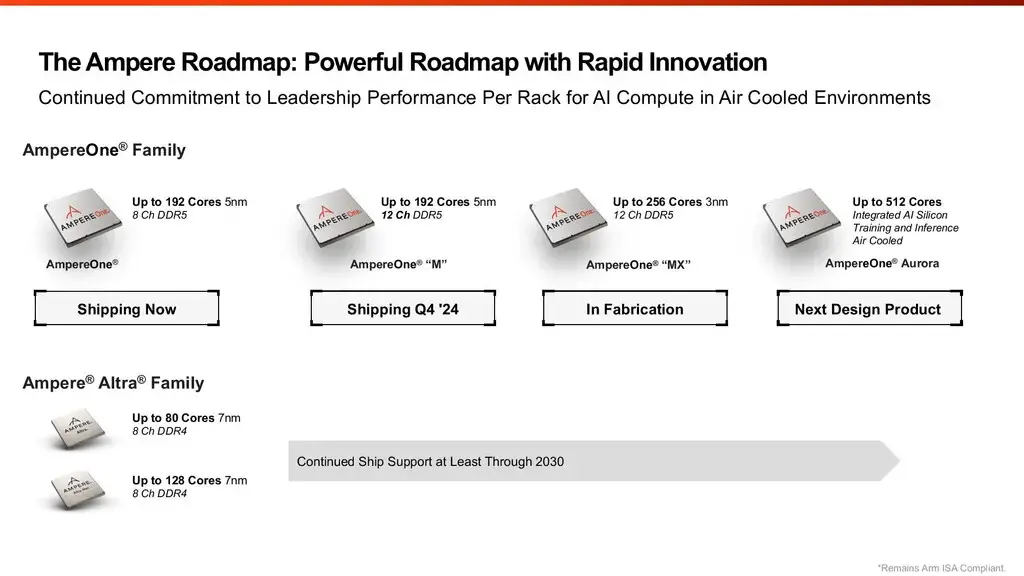

Ampere has announced an updated product roadmap that unveils AmpereOne Aurora, an upcoming member of the AmpereOne family.

Ampere said that over the past few years it has focused on developing innovative technologies for a wide range of cloud workloads and infrastructure. Because general-purpose and artificial intelligence (AI) workloads are rapidly converging into AI computing in the cloud, the company argues that future platforms must offer the following:

- Efficiency: no compromise on the industry's climate goals.

- Air cooling: deployable in any existing data center.

- Integration: AI acceleration built directly into the SoC.

Combining these requirements with Ampere's existing technologies (custom cores, a proprietary mesh, and chiplet interconnects) and adding built-in AI acceleration yields what Ampere calls the revolutionary AmpereOne Aurora. Its key features include:

- A total of 512 custom cores, about 2.7 times the 192 cores of current AmpereOne processors.

- A scalable AmpereOne Mesh that seamlessly connects all types of compute.

- Ampere's own AI IP integrated with HBM memory directly in the chip package, a first for the company.

Ampere claims the product can scale across a range of AI inference and training use cases, with enough AI compute for workloads such as retrieval-augmented generation (RAG) and vector databases, and that it will deliver leading per-rack performance for AI computing. More importantly, AmpereOne Aurora can be air-cooled, so it can be deployed in any existing data center worldwide.

In the fourth quarter of 2024, Ampere plans to launch the AmpereOne M series, which keeps the 192-core count but moves to a 12-channel DDR5 platform. In 2025, Ampere plans an enhanced version that raises the core count to 256 and moves to a 3nm process to further boost performance.
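To put the announced core counts side by side, the short Python sketch below expresses each roadmap product as a multiple of a 192-core baseline. It assumes, based on the M series "maintaining" 192 cores, that current AmpereOne processors also top out at 192 cores; the dictionary labels are illustrative names, not official product SKUs. It compares core counts only and says nothing about per-core or AI performance.

```python
# Core counts as stated in the article. The 192-core baseline for current
# AmpereOne parts is an assumption inferred from the M series "maintaining" 192 cores.
# Labels are illustrative, not official SKU names.
core_counts = {
    "AmpereOne (current) / AmpereOne M": 192,
    "AmpereOne 3nm (2025)": 256,
    "AmpereOne Aurora": 512,
}

BASELINE_LABEL = "AmpereOne (current) / AmpereOne M"


def scaling_vs_baseline(counts: dict[str, int], base: int) -> dict[str, float]:
    """Return each product's core count as a multiple of the baseline core count."""
    return {name: cores / base for name, cores in counts.items()}


ratios = scaling_vs_baseline(core_counts, core_counts[BASELINE_LABEL])

for name, ratio in ratios.items():
    # Print core count and ratio to two decimal places for each product.
    print(f"{name}: {core_counts[name]} cores, {ratio:.2f}x baseline")
```

Run as-is, this reports 1.00x for the 192-core parts, 1.33x for the planned 256-core part, and 2.67x for Aurora's 512 cores.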