AMD, Broadcom, Cisco, Google, Hewlett Packard Enterprise, Intel, Meta, and Microsoft have announced an alliance to create a new industry standard for high-speed, low-latency interconnects that let AI systems scale up within data centers.

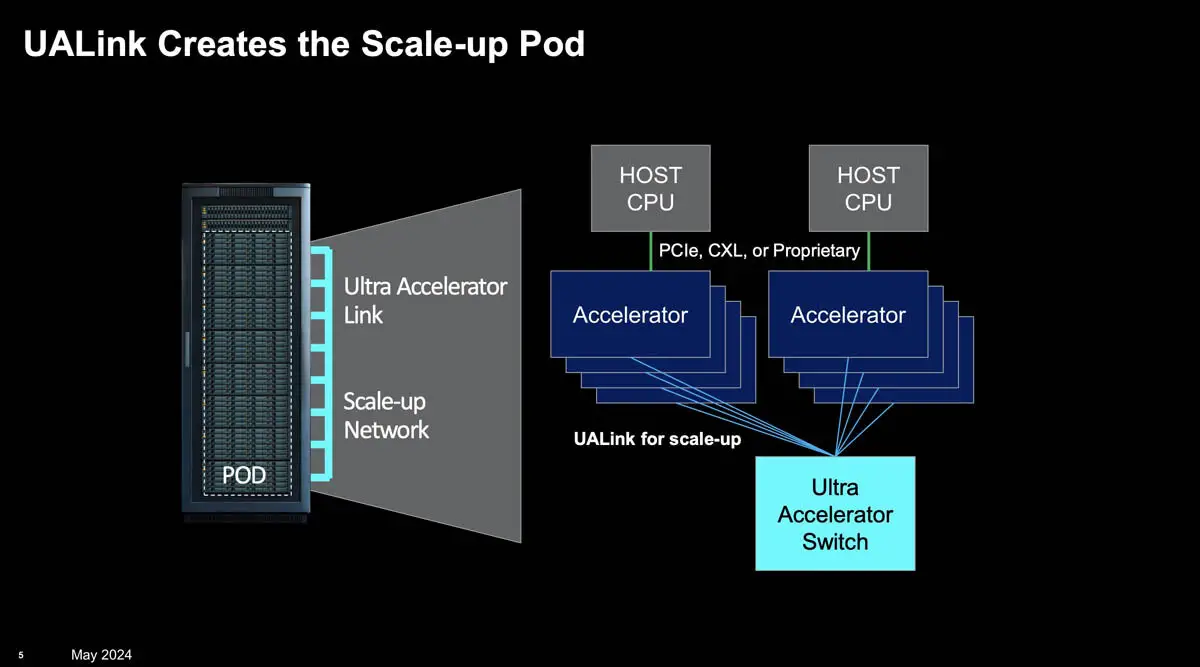

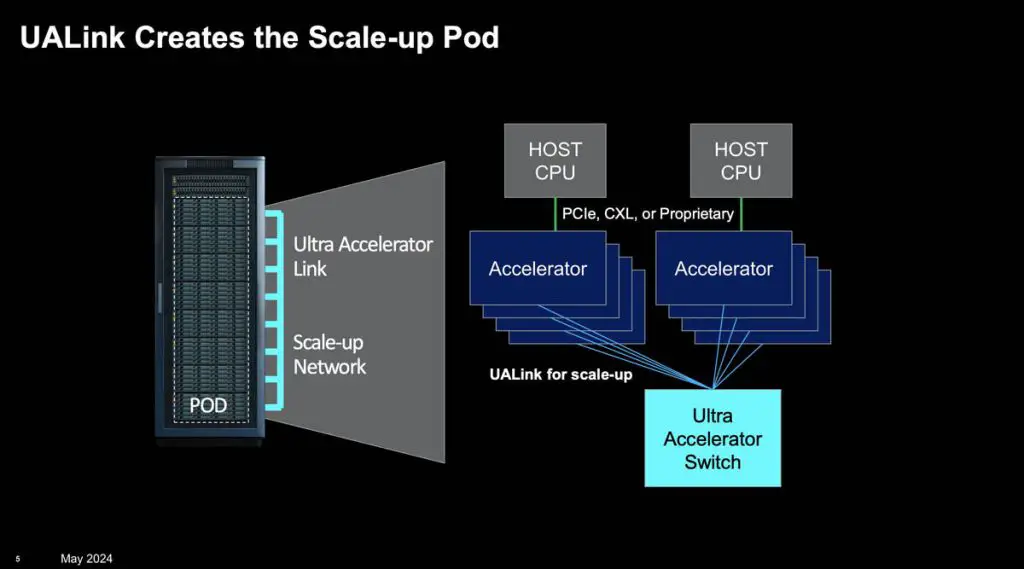

The founding group will define and develop Ultra Accelerator Link (UALink), an open industry standard for more efficient communication among AI accelerators. An open interconnect standard would give system OEMs, IT professionals, and system integrators a more interoperable, flexible, and scalable way to build AI data centers. The founding members intend to draw on their experience to support future large-scale AI and high-performance computing (HPC) data centers, improving the performance of next-generation AI/ML clusters through open standards, efficiency, and a broad ecosystem.

The UALink alliance is expected to be formally incorporated in the third quarter of 2024, with the UALink 1.0 specification becoming available to member companies in the same timeframe. UALink 1.0 is intended to connect up to 1,024 AI accelerators within a single compute cluster (Pod) and to allow direct load and store operations between the memory attached to GPUs and other accelerators. In short, the goal is to interconnect large numbers of AI accelerators more effectively so that large-scale computational workloads run more efficiently.

Conspicuously absent from the UALink alliance is NVIDIA, the leading supplier of AI accelerators. Many industry observers see the alliance as an attempt to challenge NVIDIA's dominance of the AI chip market. NVIDIA already has its own proprietary interconnect protocol, NVLink, so it is unlikely to adopt a competing standard.