Researchers at Palo Alto Networks have found that large language models (LLMs) can easily be used to generate novel variants of malicious JavaScript code that evade detection.

According to the researchers, LLMs struggle to develop malware from scratch, but they can readily rewrite and obfuscate existing code, which makes it significantly harder to identify. Cybercriminals can prompt an LLM to apply transformations that make malicious code look more natural and therefore harder to classify as harmful. Applied repeatedly, such rewrites can degrade the performance of malware classification systems until they label malicious scripts as benign.

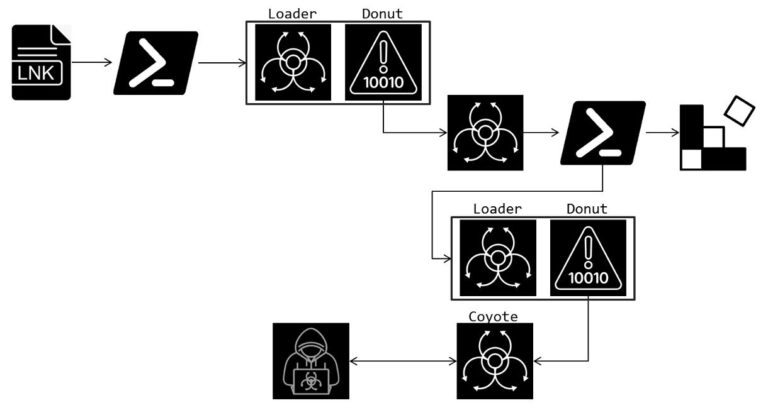

Although LLM developers have put safety measures in place, attackers turn to alternative tools such as WormGPT to craft phishing emails and new malware strains. Researchers at Unit 42, Palo Alto Networks' threat intelligence team, demonstrated how LLMs can iteratively rewrite samples of malicious code to bypass detection models such as Innocent Until Proven Guilty (IUPG) and PhishingJS. The process produced approximately 10,000 JavaScript variants that retained their malicious functionality while evading classification as harmful.

The rewriting steps included renaming variables, splitting strings, inserting junk code, removing unnecessary whitespace, and completely reimplementing the code. A greedy algorithm that applied these steps changed the classification of the malicious code to “benign” in 88% of cases.
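
The greedy selection described above can be illustrated with a minimal sketch for robustness testing of a detector. It is not the researchers' implementation: the rewriting steps here are plain Python functions standing in for LLM prompts, `toy_score` is a hypothetical stand-in for a real detection model, and the check that a rewrite preserves behavior is omitted. All names are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

Rewrite = Callable[[str], str]


def toy_score(text: str) -> float:
    """Hypothetical detector: share of tokens equal to one watched identifier."""
    tokens = text.split()
    if not tokens:
        return 0.0
    return tokens.count("suspicious_name") / len(tokens)


# Toy rewriting steps (assumptions; a real setup would prompt an LLM instead).
def rename_identifier(text: str) -> str:
    return text.replace("suspicious_name", "total")


def collapse_whitespace(text: str) -> str:
    return " ".join(text.split())


def append_comment(text: str) -> str:
    return text + " // reviewed"


def greedy_rewrite(
    sample: str,
    rewrites: List[Rewrite],
    score: Callable[[str], float],
    threshold: float = 0.5,
    max_steps: int = 10,
) -> Tuple[str, float, List[str]]:
    """At each step, keep the single rewrite that lowers the score the most."""
    current, current_score = sample, score(sample)
    applied: List[str] = []
    for _ in range(max_steps):
        if current_score < threshold:
            break  # the detector no longer flags the sample
        candidates = []
        for rewrite in rewrites:
            variant = rewrite(current)
            candidates.append((score(variant), rewrite.__name__, variant))
        best_score, best_name, best_text = min(candidates, key=lambda c: c[0])
        if best_score >= current_score:
            break  # no rewrite improves on the current variant
        current, current_score = best_text, best_score
        applied.append(best_name)
    return current, current_score, applied


sample = "suspicious_name = 1 ; suspicious_name + 1"
steps = [rename_identifier, collapse_whitespace, append_comment]
print(greedy_rewrite(sample, steps, toy_score, threshold=0.1))
```

On this toy input, only the renaming step is selected, because it alone drives the toy score below the threshold. The point of the sketch is the search strategy: each iteration commits to the locally best rewrite and never backtracks.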

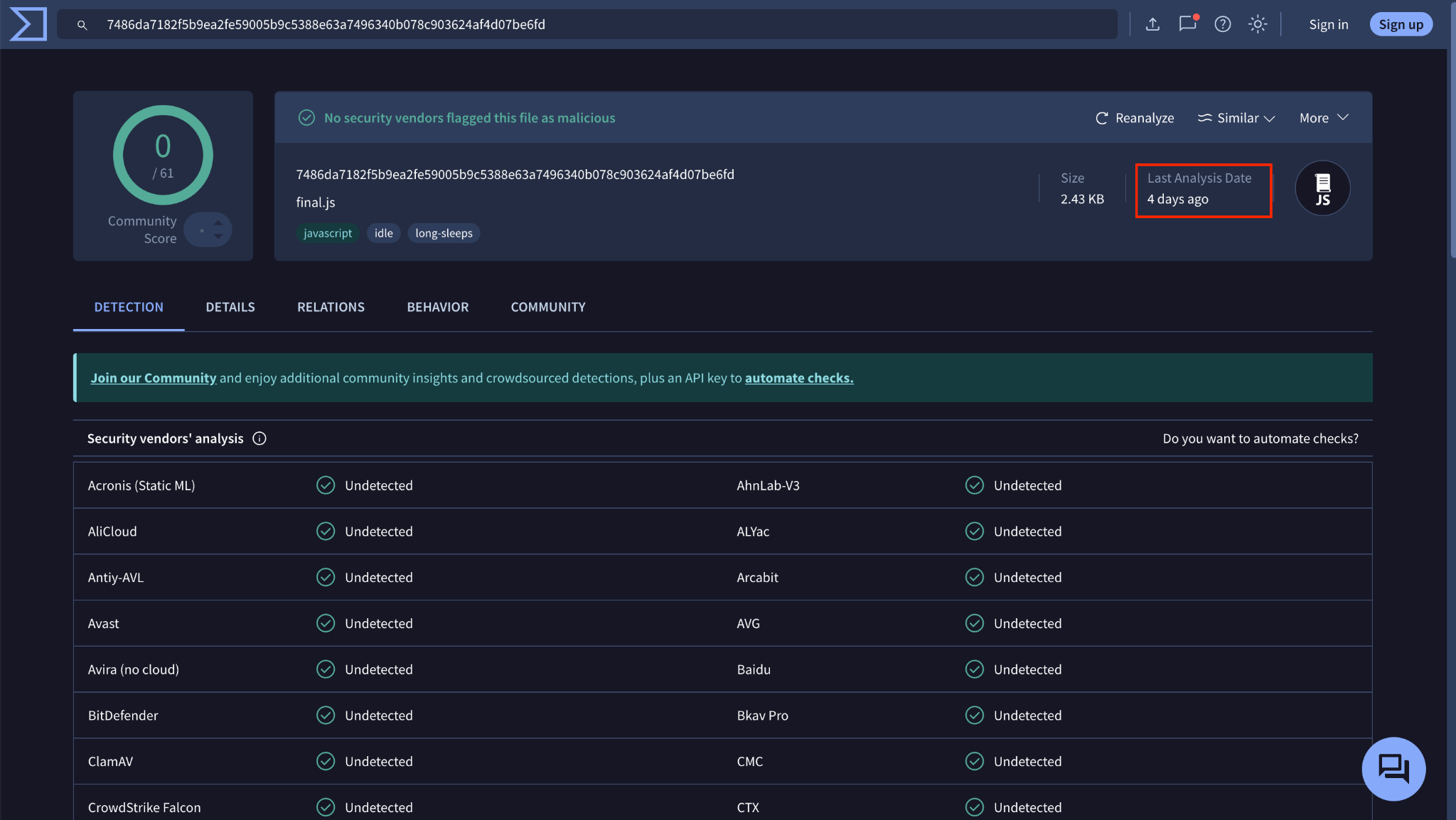

These rewritten scripts also evaded malware analyzers on platforms such as VirusTotal, which underscores how effective the obfuscation is. Notably, the changes made by LLMs look more natural than the output of tools such as obfuscator.io, which often leaves easily detectable traces.

Although OpenAI disclosed in October 2024 that it had blocked more than 20 operations that exploited its platform for reconnaissance, vulnerability research, and other illicit purposes, cybercriminals continue to find ways to misuse AI models for malicious ends.

Generative AI could dramatically increase the volume of malware variants, yet the same rewriting approach can be used to improve machine learning models: rewritten samples can serve as additional training data that makes detectors more resilient to such changes.
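
As a rough illustration of the defensive use, the sketch below expands a labeled training set with rewritten variants of the samples already labeled malicious, so that a detector retrained on the result has seen those variants. It assumes a dataset of `(text, label)` pairs with `1` meaning malicious, and the function name `augment_with_rewrites` is hypothetical. The retraining step itself depends on the model and is not shown.

```python
from typing import Callable, List, Tuple

Sample = Tuple[str, int]  # (script text, label); 1 = malicious, 0 = benign
Rewrite = Callable[[str], str]


def augment_with_rewrites(dataset: List[Sample], rewrites: List[Rewrite]) -> List[Sample]:
    """Return the dataset plus rewritten variants of its malicious samples.

    Each variant keeps the label of the sample it was derived from, which
    assumes that every rewrite preserves the script's behavior.
    """
    augmented = list(dataset)
    seen = {text for text, _ in dataset}
    for text, label in dataset:
        if label != 1:
            continue  # only malicious samples are expanded in this sketch
        for rewrite in rewrites:
            variant = rewrite(text)
            if variant not in seen:  # skip duplicates and no-op rewrites
                seen.add(variant)
                augmented.append((variant, label))
    return augmented
```

The deduplication matters in practice because many rewrites leave a given sample unchanged, and repeated copies would skew the class balance without adding information.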

Thus, the expanding capabilities of AI present both challenges and solutions: technologies used to obscure malicious code can also serve as tools to fortify cybersecurity when applied to develop more robust detection systems.